A project at the University of Oregon Cognitive Modeling and Eye Tracking Lab that aims to explain and predict user performance in complex auditory and visual multitask environments through computational modeling and empirical human data collection, including eye tracking.

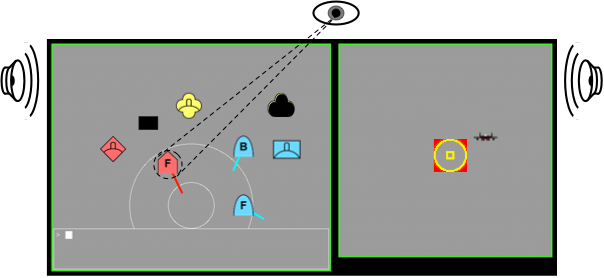

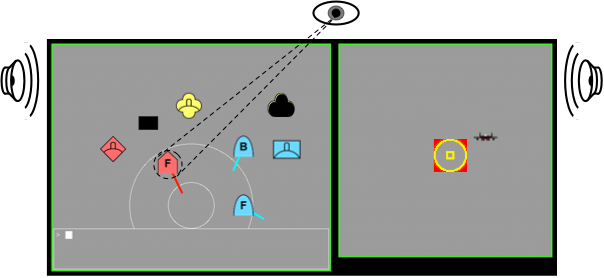

The above diagram shows a simulated screenshot from the task environment, with a user's eye looking at blips on the left side of the screen, and spatialized sound coming from stereo speakers to help guide the user's gaze to the blips that need to be classified.

Watch a QuickTime or MP4 video with 3D audio and the user's gaze superimposed as a small red dot.

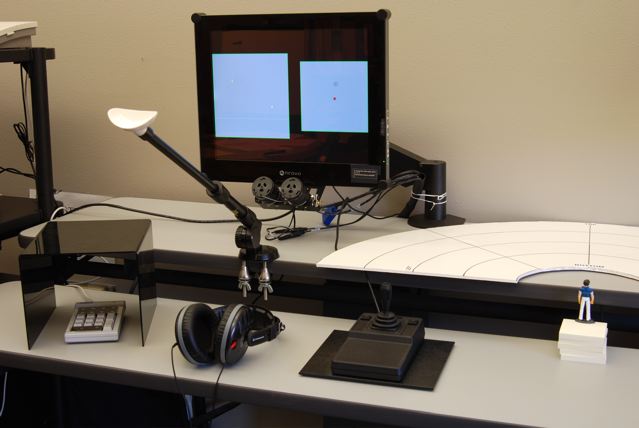

The photo above shows the task environment for collecting human data: a computer display with an attached eye tracker, a chinrest to help with eye tracking, headphones for playing 3D audio, and a keypad and joystick for user input. At the bottom right is a small action figure with a cardboard cutout that reminds the user of the mapping between visual and auditory space used to identify the locations of on-screen blips that need to be classified.

The user's task is to classify the blips as "hostile" or "neutral" based on their color, shape, speed, and direction. You can watch a QuickTime or MP4 video of the task being performed. The video includes the 3D audio that cues the participant as to which blip needs to be classified next, and it shows the user's gaze superimposed as a small red dot.

Note that the display is "gaze-contingent": it shows only the contents of the window where the user is looking. This lets the experiment specifically study how people can use 3D audio to keep track of what is happening on a secondary visual display that is just out of range of a primary visual display, as would be useful for complex multi-display tasks such as air traffic control.
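The gaze-contingent rule described above (draw only the window under the user's gaze, blank the others) can be sketched as a simple hit test. This is a minimal illustration, not the lab's experiment software: the `Window` class, the `visible_window` and `render_mask` functions, and the two side-by-side 640x1024 windows in the usage example are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass(frozen=True)
class Window:
    """A rectangular display region in screen pixels (hypothetical layout)."""
    name: str
    left: int
    top: int
    width: int
    height: int

    def contains(self, x: float, y: float) -> bool:
        # Half-open bounds so two adjacent windows never both claim a point.
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def visible_window(windows: Sequence[Window],
                   gaze_x: float, gaze_y: float) -> Optional[Window]:
    """Return the window under the gaze point, or None if gaze is elsewhere."""
    for w in windows:
        if w.contains(gaze_x, gaze_y):
            return w
    return None


def render_mask(windows: Sequence[Window],
                gaze_x: float, gaze_y: float) -> Dict[str, bool]:
    """Map each window name to True (draw its contents) or False (blank it).

    Gaze-contingent rule: only the window being looked at is drawn.
    """
    target = visible_window(windows, gaze_x, gaze_y)
    return {w.name: (w is target) for w in windows}


if __name__ == "__main__":
    # Hypothetical layout: two side-by-side windows, 640x1024 pixels each.
    layout = [Window("left", 0, 0, 640, 1024),
              Window("right", 640, 0, 640, 1024)]
    print(render_mask(layout, 100, 500))   # gaze on the left window
    print(render_mask(layout, 2000, 10))   # gaze off both windows
```

In this sketch, a gaze point outside every window blanks all of them. A real gaze-contingent system would likely also need to tolerate eye-tracker noise and blinks, for example by holding the last visible window briefly, which is omitted here for brevity.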

Anthony Hornof (Principal Investigator), Yunfeng Zhang, Tim Halverson, Erik Brown, Andy Isaacson, Kyle Vessey.

The approach includes:

(a) Focus primarily on one specific dual task and corresponding complex display, which together provide a solid foundation for exploring a wide range of auditory-visual perceptual integration issues.

(b) Conduct exploratory cognitive modeling to explain data that have already been collected for that task.

(c) Carefully redesign and re-implement the software used to collect human data in a tightly controlled multimodal multitasking environment, including both eye tracking and spatialized audio.

(d) Conduct a new empirical study with eye tracking to evaluate the predictions of competing models of the task that were built before eye movement data were available.

(e) Refine the models and the modeling framework based on the eye movement data.

(f) Use the models to guide the design of new, complex, improved auditory-visual displays.

(g) Build a prototype display using the model as a design assistant and initial test user.

The approach deviates from the original proposal only in its increased emphasis on redesigning the software used to collect the human data, and thus in the addition of step (c) above.

These videos illustrate the three independent variables: wave size, gaze contingency (on or off), and sound cueing (on or off). Each video shows the user's gaze superimposed as a small red dot. Note that the videos are quite large (1024x1280). Listening with headphones best conveys the 3D audio.

Medium difficulty: medium wave (6 blips), no gaze contingency, no sound cueing. QuickTime or MP4

Difficult: bigger wave (8 blips), gaze contingency on, sound cueing on. QuickTime or MP4

Hornof, A. J., & Zhang, Y. (2010, to appear). Task-constrained interleaving of perceptual and motor processes in a time-critical dual task as revealed through eye tracking. Proceedings of ICCM 2010: International Conference on Cognitive Modeling, 6 pages.

Hornof, A. J., Zhang, Y., & Halverson, T. (2010). Knowing where and when to look in a time-critical multimodal dual task. Proceedings of ACM CHI 2010: Conference on Human Factors in Computing Systems, New York: ACM, 2103-2112. Honorable mention paper (top 5% of all papers submitted). The video that accompanied the paper is available in QuickTime or MP4.

Hornof, A. J., Halverson, T., Isaacson, A., & Brown, E. (2008). Transforming object locations on a 2D visual display into cued locations in 3D auditory space. Proceedings of the 52nd Annual Meeting of the Human Factors and Ergonomics Society, 1170-1174.

National Science Foundation (NSF) IIS-0713688. $499,591 for 7/1/10 to 6/30/13. Principal Investigator (PI): Anthony Hornof. Title: HCC: Small: A Computational Theory of Perceptual Integration in Multimodal Multitasking.

Office of Naval Research (ONR) Award No. N00014-06-10054, entitled Computational Modeling and Eye Tracking of Multitasking Performance with Multimodal Auditory and Visual Displays, $439,787 for 10/1/05 to 9/30/08, Anthony Hornof, Principal Investigator. Any opinions, findings, and conclusions or recommendations expressed in these materials are those of the author(s) and do not necessarily reflect the views of ONR.

Last updated by ajh on 6/24/10