
Empirical performance evaluation of parallel and distributed systems
often generates large amounts of performance data and analysis results
from multiple experiments as performance is investigated and problems
are diagnosed. Yet, despite the broad utility of cross-experiment
performance analysis, most current performance tools support analysis
of only a single application execution. We believe this is due
primarily to a lack of tools for performance data management. Hence,
there is strong motivation to develop performance database technology
that can provide a common foundation for performance data storage and
access. Such technology could offer standard solutions for how to
represent performance data, how to store it in a manageable way, how
to interface with the database in a portable manner, and how to
provide performance information services to a broad set of analysis
tools and users. A performance database system built on this
technology could serve both as a core module in a performance
measurement and analysis system and as a central repository of
performance information contributed and shared by several groups.

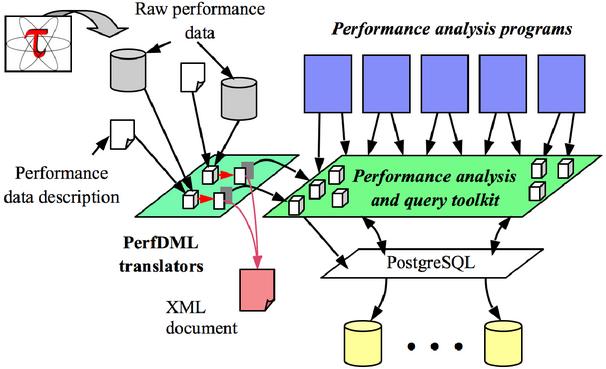

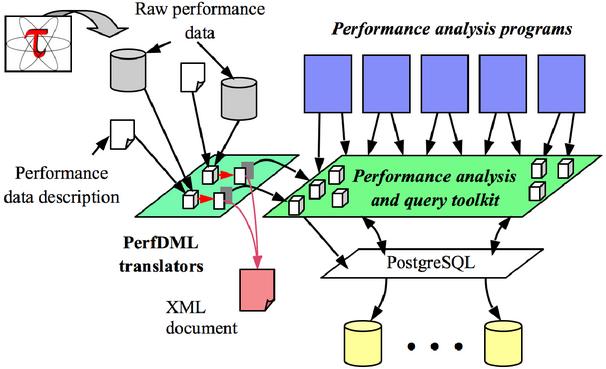

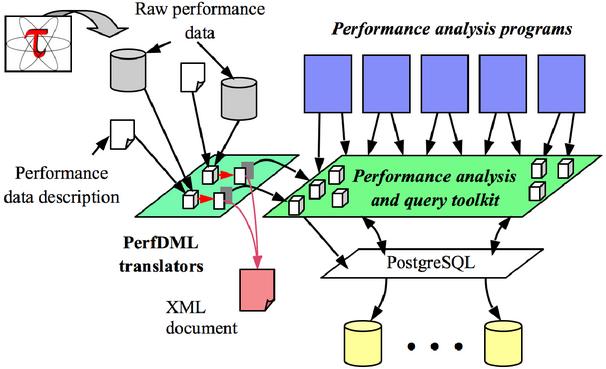

To address the performance data management problem, we designed the
Performance DataBase Framework (PerfDBF) architecture shown in Figure
5. The PerfDBF architecture separates the framework into three
components: performance data input, database storage, and database
query and analysis. Performance data is organized in a hierarchy of
applications, experiments, and trials. A performance study of an
application is treated as a set of experiments, each representing a
set of associated performance measurements, and a trial is one
measurement instance of an experiment. We designed a Performance Data
Meta Language (PerfDML) and PerfDML translators to convert raw
performance data into the internal storage format of the Performance
DataBase (PerfDB). PerfDB is structured according to the
application/experiment/trial hierarchy. An object-relational DBMS is
specified to provide a standard SQL interface for querying performance
information. A Performance DataBase Toolkit (PerfDBT) provides commonly
used query and analysis utilities for interfacing with performance
analysis tools.

Figure 5: TAU performance database framework
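The application/experiment/trial hierarchy and the path from a PerfDML
document into the database can be sketched in a few dozen lines. This
is a minimal, hypothetical illustration, not the PerfDBF
implementation: SQLite (from the Python standard library) stands in
for the object-relational DBMS, and the table names, the XML element
and attribute names (`trial`, `routine`, `metric`, `procs`), and the
helpers `get_or_create` and `load_trial` are all assumptions, since the
actual PerfDML schema and PerfDB tables are not given here.

```python
import sqlite3
import xml.etree.ElementTree as ET

# HYPOTHETICAL schema mirroring the application/experiment/trial hierarchy.
# SQLite stands in for the object-relational DBMS that PerfDBF specifies.
SCHEMA = """
CREATE TABLE application (id INTEGER PRIMARY KEY, name TEXT UNIQUE);
CREATE TABLE experiment (
    id INTEGER PRIMARY KEY,
    application_id INTEGER REFERENCES application(id),
    name TEXT,
    UNIQUE (application_id, name));
CREATE TABLE trial (
    id INTEGER PRIMARY KEY,
    experiment_id INTEGER REFERENCES experiment(id),
    num_procs INTEGER);
CREATE TABLE profile (
    trial_id INTEGER REFERENCES trial(id),
    routine TEXT, metric TEXT, value REAL);
"""

# HYPOTHETICAL PerfDML-like document; the real PerfDML format is not shown here.
SAMPLE = """
<trial application="sweep3d" experiment="scaling" procs="4">
  <routine name="main"><metric name="TIME" value="10.0"/></routine>
  <routine name="solve"><metric name="TIME" value="7.5"/></routine>
</trial>
"""

def get_or_create(conn, table, cols, vals):
    """Return the id of the row matching cols=vals, inserting it if absent."""
    where = " AND ".join(f"{c} = ?" for c in cols)
    row = conn.execute(f"SELECT id FROM {table} WHERE {where}", vals).fetchone()
    if row:
        return row[0]
    placeholders = ", ".join("?" for _ in cols)
    cur = conn.execute(
        f"INSERT INTO {table} ({', '.join(cols)}) VALUES ({placeholders})", vals)
    return cur.lastrowid

def load_trial(conn, xml_text):
    """Store one trial document under its application and experiment."""
    root = ET.fromstring(xml_text)
    app_id = get_or_create(conn, "application", ["name"], [root.get("application")])
    exp_id = get_or_create(conn, "experiment", ["application_id", "name"],
                           [app_id, root.get("experiment")])
    trial_id = conn.execute(
        "INSERT INTO trial (experiment_id, num_procs) VALUES (?, ?)",
        (exp_id, int(root.get("procs")))).lastrowid
    # One profile row per (routine, metric) measurement in this trial.
    for routine in root.iter("routine"):
        for metric in routine.iter("metric"):
            conn.execute("INSERT INTO profile VALUES (?, ?, ?, ?)",
                         (trial_id, routine.get("name"), metric.get("name"),
                          float(metric.get("value"))))
    return trial_id

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
load_trial(conn, SAMPLE)

# Query through plain SQL: per-routine TIME values for every trial of an experiment.
rows = conn.execute("""
    SELECT t.num_procs, p.routine, p.value
    FROM profile p
    JOIN trial t ON p.trial_id = t.id
    JOIN experiment e ON t.experiment_id = e.id
    WHERE e.name = 'scaling' AND p.metric = 'TIME'
    ORDER BY p.routine""").fetchall()
print(rows)
```

Because each trial row points to its experiment and each experiment to
its application, inter-trial and cross-experiment questions reduce to
ordinary SQL joins, which is the kind of portable query interface the
framework calls for.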

To evaluate the PerfDBF architecture, we developed a prototype that
manages parallel performance profiles produced by the TAU performance
system. The prototype converts raw TAU profiles to PerfDML form, which
is realized using XML. Database input tools read the PerfDML documents
and store the profile information in the database. Analysis tools then
use the PerfDB interface to perform intra-trial, inter-trial, and
cross-experiment query and analysis. To demonstrate the usefulness of
PerfDBF, we have developed a scalability analysis tool. Given a set of
experiment trials representing executions of a program on varying
numbers of processors, the tool computes scalability statistics for
every routine and every performance metric. As an extension of this
work, we are applying the PerfDBF prototype in a performance
regression testing system to track performance changes during
software development.
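The specific scalability statistics are not listed above; one
plausible choice, assumed here, is the speedup $S_p = T_{p_0}/T_p$ and
relative efficiency $E_p = S_p \, p_0 / p$ of each routine with respect
to the smallest processor count $p_0$ in the trial set. The sketch
below takes rows shaped like `(num_procs, routine, metric, value)`,
such as a trial-joined SQL query might return, assumes every metric is
time-like (lower is better, never zero), and groups the rows per
routine and metric. The function name `scalability` and the sample
numbers are hypothetical.

```python
from collections import defaultdict

def scalability(rows):
    """Speedup and relative efficiency per (routine, metric).

    rows: iterable of (num_procs, routine, metric, value), where value is
    ASSUMED to be a nonzero time-like metric (lower is better).
    Returns {(routine, metric): [(num_procs, speedup, efficiency), ...]},
    each list sorted by processor count.
    """
    # Collect one value per processor count for each (routine, metric).
    series = defaultdict(dict)
    for procs, routine, metric, value in rows:
        series[(routine, metric)][procs] = value

    stats = {}
    for key, by_procs in series.items():
        base_p = min(by_procs)          # smallest run is the baseline
        base_v = by_procs[base_p]
        points = []
        for p in sorted(by_procs):
            speedup = base_v / by_procs[p]
            efficiency = speedup * base_p / p
            points.append((p, speedup, efficiency))
        stats[key] = points
    return stats

# Illustrative, made-up trial data: one experiment on 1, 2, and 4 processors.
rows = [
    (1, "solve", "TIME", 8.0), (2, "solve", "TIME", 4.0), (4, "solve", "TIME", 2.5),
    (1, "io", "TIME", 1.0), (2, "io", "TIME", 1.0), (4, "io", "TIME", 1.0),
]
for (routine, metric), points in sorted(scalability(rows).items()):
    for p, s, e in points:
        print(f"{routine:6s} {metric} p={p}: speedup={s:.2f} efficiency={e:.2f}")
```

For the made-up sample, `solve` reaches a speedup of 3.2 (efficiency
0.8) on four processors, whereas `io` does not speed up at all, which
is the kind of per-routine contrast a scalability tool is meant to
expose. Measuring efficiency relative to $p_0$ rather than to a
single-processor run keeps the statistic usable when no one-processor
trial exists.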

The main purpose of the PerfDBF work is to fill a gap in parallel
performance technology and thereby make it possible for performance
tools to interoperate. The PPerfDB [8] and Aksum [6] projects have
demonstrated the benefit of providing such technology support, and we
hope to merge our efforts with theirs. We already see benefits within
the TAU toolset: our parallel profile analysis tool, ParaProf, can read
profiles stored in PerfDBF. In general, we believe the key will be to
find common representations of performance data and database
interfaces that can be adopted as the lingua franca among performance
information producers and consumers. Implementing such common
representations and interfaces will be an important enabling step
forward in performance tool research.


Sameer Suresh Shende

2003-02-21