TAU User Guide

TAU preface

TAU Performance System is a portable profiling and tracing toolkit for performance analysis of parallel programs written in Fortran, C, C++, Java, and Python. TAU (Tuning and Analysis Utilities) is capable of gathering performance information through instrumentation of functions, methods, basic blocks, and statements. The TAU API also provides selection of profiling groups for organizing and controlling instrumentation. Calls to the TAU API are made by probes inserted into the execution of the application via source transformation, compiler directives or by library interposition.

This guide is organized into sections. Readers who want to get started right away can skip to the Some Common Application Scenarios section for step-by-step instructions on obtaining different kinds of performance data, or browse the Quick Reference for common TAU commands and variables.

TAU can be found on the web at: http://tau.uoregon.edu

2. TAU Instrumentation

2.1. Types of Instrumentation

TAU provides three methods to track the performance of your application: library interposition using tau_exec, compiler directives, and source transformation using PDT. The following table lists the features and requirements of each method:

| Method | Requires recompiling | Requires PDT | Shows MPI events | Routine-level event | Low level events (loops, phases, etc…) | Throttling to reduce overhead | Ability to exclude file from instrumentation | Ability to exclude other regions of code |
|---|---|---|---|---|---|---|---|---|
| Interposition | | | Yes | | | Yes | | |
| Compiler | Yes | | Yes | Yes | | Yes | Yes | |
| Source | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

The requirements for each method increase as we move down the table: tau_exec only requires a system with shared library support, compiler-based instrumentation requires recompiling the target application, and source instrumentation additionally requires PDT. For this reason we often recommend that users start with library interposition and move down the table if more features are needed.

2.2. Dynamic instrumentation through library pre-loading

Dynamic instrumentation is achieved through library pre-loading. The libraries chosen for pre-loading determine the scope of instrumentation. Options include tracking MPI, io, memory, cuda, and opencl library calls. MPI instrumentation is included by default; the others are enabled by command-line options to tau_exec. More information is available on the tau_exec manual page. Dynamic instrumentation can be used on both uninstrumented binaries and binaries instrumented via one of the methods below, so different layers of instrumentation can be combined.

To use tau_exec place this command before the application executable when running the application. In this example IO instrumentation is requested.

%> tau_exec -io ./a.out

%> mpirun -np 4 tau_exec -io ./a.out

2.3. TAU scripted compilation

2.3.1. Instrumentation

For more detailed profiles, TAU provides two means to compile your application with TAU: through your compiler or through source transformation using PDT.

2.3.2. Compiler Based Instrumentation

TAU provides these scripts: tau_cc.sh, tau_cxx.sh, tau_upc.sh, tau_f77.sh and tau_f90.sh to compile programs. You might use tau_cc.sh to compile a C program by typing:

%> module load tau

%> tau_cc.sh -tau_options=-optCompInst samplecprogram.c

On machines where a TAU module is not available, you will need to set the TAU makefile and/or options. The makefile and options control how TAU will compile your application. Use

%>tau_cc.sh -tau_makefile=[path to makefile] \

-tau_options=[option] samplecprogram.c

The Makefile can be found in the /[arch]/lib directory of your TAU distribution, for example /x86_64/lib/Makefile.tau-mpi-pdt .

You can also use a Makefile specified in an environment variable. To run tau_cc.sh so it uses the Makefile specified by environment variable TAU_MAKEFILE , type:

%>export TAU_MAKEFILE=[path to tau]/[arch]/lib/[makefile]

%>export TAU_OPTIONS=-optCompInst

%>tau_cc.sh sampleCprogram.c

Similarly, if you want to set compile time options like selective instrumentation you can use the TAU_OPTIONS environment variable.

2.3.3. Source Based Instrumentation

TAU provides these scripts: tau_cc.sh, tau_cxx.sh, tau_upc.sh, tau_f77.sh and tau_f90.sh to instrument and compile programs. You might use tau_cc.sh to compile a C program by typing:

%> module load tau

%> tau_cc.sh samplecprogram.c

When setting the TAU_MAKEFILE make sure the Makefile name contains pdt because you will need a version of TAU built with PDT.

A list of options for the TAU compiler scripts can be found by typing man tau_compiler.sh or in this chapter of the reference guide.

2.3.4. Options to TAU compiler scripts

These are some commonly used options available to the TAU compiler scripts. Either set them via the TAU_OPTIONS environment variable or the -tau_options= option to tau_cc.sh, tau_cxx.sh, tau_upc.sh, tau_f77.sh and tau_f90.sh

| Option | Description |
|---|---|
| -optVerbose | Enable verbose output (default: on) |
| -optKeepFiles | Do not remove intermediate files |
| -optShared | Use shared library of TAU (consider when using |

2.4. Selectively Profiling an Application

2.4.1. Custom Profiling

TAU allows you to customize the instrumentation of a program by using a selective instrumentation file. This instrumentation file is used to manually control which parts of the application are profiled and how they are profiled. If you are using one of the TAU compiler wrapper scripts to instrument your application you can use the -tau_options=-optTauSelectFile=<file> option to enable selective instrumentation.

Selective instrumentation may be specified at runtime by setting the TAU_SELECT_FILE environment variable to the location of a valid selective instrumentation file in the instrumented application’s run environment.

To specify a selective instrumentation file, create a text file and use the following guide to fill it in:

- Wildcards for routine names are specified with the # mark (because * symbols show up in routine signatures). The # mark is unfortunately the comment character as well, so to specify a leading wildcard, place the entry in quotes.

- Wildcards for file names are specified with * symbols.

Here is an example file:

#Tell TAU to not profile these functions

BEGIN_EXCLUDE_LIST

void quicksort(int *, int, int)

# The next line excludes all functions beginning with "sort_" and having

# arguments "int *"

void sort_#(int *)

void interchange(int *, int *)

END_EXCLUDE_LIST

#Exclude these files from profiling

BEGIN_FILE_EXCLUDE_LIST

*.so

END_FILE_EXCLUDE_LIST

BEGIN_INSTRUMENT_SECTION

# A dynamic phase will break up the profile into phases where

# each event is recorded according to the phase of the application

# in which it occurred.

dynamic phase name="foo1_bar" file="foo.c" line=26 to line=27

# instrument all the outer loops in this routine

loops file="loop_test.cpp" routine="multiply"

# tracks memory allocations/deallocations as well as potential leaks

memory file="foo.f90" routine="INIT"

# tracks the size of read, write and print statements in this routine

io file="foo.f90" routine="RINB"

END_INSTRUMENT_SECTION
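To make the routine-name wildcard concrete, here is a minimal Python sketch that treats # as "match any run of characters" and checks routine signatures against an exclude list. It only illustrates the matching rule described above; the helper names routine_pattern_to_regex and is_excluded are hypothetical, and this is not how TAU itself parses the file.

```python
import re


def routine_pattern_to_regex(pattern):
    """Translate a routine pattern into a compiled regular expression.

    Assumption: '#' stands for any run of characters; everything else,
    including '*' (which appears in C signatures), is taken literally.
    """
    literal_parts = [re.escape(part) for part in pattern.split("#")]
    return re.compile("^" + ".*".join(literal_parts) + "$")


def is_excluded(signature, exclude_patterns):
    """Return True if the signature matches any pattern in the exclude list."""
    return any(routine_pattern_to_regex(p).match(signature)
               for p in exclude_patterns)


# Patterns taken from the BEGIN_EXCLUDE_LIST section of the example file.
exclude_list = [
    "void quicksort(int *, int, int)",
    "void sort_#(int *)",
    "void interchange(int *, int *)",
]

print(is_excluded("void sort_bubble(int *)", exclude_list))  # True
print(is_excluded("int main(int, char **)", exclude_list))   # False
```

Splitting on # before escaping keeps the * characters in the signatures literal, which is the reason the guide gives for using # as the routine wildcard.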

Selective instrumentation files can be created automatically from ParaProf by right clicking on a trial and selecting the Create Selective Instrumentation File menu item.

3. Profiling

This chapter describes running an instrumented application, generating profile data and analyzing that data. Profiling shows the summary statistics of performance metrics that characterize application performance behavior. Examples of performance metrics are the CPU time associated with a routine, the count of the secondary data cache misses associated with a group of statements, the number of times a routine executes, etc.

3.1. Running the Application

After instrumentation and compilation are completed, the profiled application is run to generate the profile data files. These files can be stored in a directory specified by the environment variable PROFILEDIR. By default, profiles are placed in the current directory. You can also set the TAU_VERBOSE environment variable to see the steps the TAU measurement system takes while your application is running. Example:

% setenv TAU_VERBOSE 1

% setenv PROFILEDIR /home/sameer/profiledata/experiment55

% mpirun -np 4 matrix

Other environment variables you can set to enable advanced MPI measurement features are TAU_TRACK_MESSAGE, to track MPI message statistics when profiling or message lines when tracing, and TAU_COMM_MATRIX, to generate MPI communication matrix data.

3.2. Reducing Performance Overhead with TAU_THROTTLE

TAU automatically throttles short-running functions to reduce the overhead associated with profiling them. This feature may be turned off by setting the environment variable TAU_THROTTLE to 0. The default rule TAU uses to determine which functions to throttle is numcalls > 100000 && usecs/call < 10, which means that if a function executes more than 100000 times and has an inclusive time per call of less than 10 microseconds, profiling of that function is disabled once that threshold is reached. To change the values of numcalls and usecs/call, the user may optionally set these environment variables:

% setenv TAU_THROTTLE_NUMCALLS 2000000

% setenv TAU_THROTTLE_PERCALL 5

This changes the values to 2 million calls and 5 microseconds per call. Functions that are throttled are marked explicitly in their names as THROTTLED.
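The throttling rule can be written out as a small predicate. The Python sketch below is only an illustration of the condition numcalls > 100000 && usecs/call < 10 applied to final totals; the function name is_throttled and its parameter names are hypothetical, and TAU itself applies the rule at run time as calls accumulate.

```python
def is_throttled(numcalls, inclusive_usecs,
                 max_calls=100000, min_usecs_per_call=10.0):
    """Apply the throttling rule to one function's totals.

    max_calls mirrors TAU_THROTTLE_NUMCALLS and min_usecs_per_call mirrors
    TAU_THROTTLE_PERCALL; the defaults are the ones quoted in the text.
    """
    if numcalls <= max_calls:
        return False
    return inclusive_usecs / numcalls < min_usecs_per_call


# 500,000 calls totalling one second: 2 microseconds per call.
print(is_throttled(500_000, 1_000_000))                      # True
# Same function with the thresholds raised to 2 million calls and 5 usec.
print(is_throttled(500_000, 1_000_000,
                   max_calls=2_000_000, min_usecs_per_call=5.0))  # False
```

Both conditions must hold, so raising either threshold keeps more short routines in the profile at the cost of more overhead.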

3.3. Profiling each event callpath

You can enable callpath profiling by setting the environment variable TAU_CALLPATH to 1. In this mode TAU records the callpath of each event up to the depth set by the TAU_CALLPATH_DEPTH environment variable (default is two). Because instrumentation overhead increases with the depth of the callpath, you should use the shortest callpath that is sufficient.
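As a rough illustration of what the depth setting means, the Python sketch below truncates a call stack to its innermost entries and joins them into a single event name. The function callpath_name is hypothetical, and the " => " separator is an assumption about how callpath events are labelled; the sketch is not taken from TAU's implementation.

```python
def callpath_name(call_stack, depth=2):
    """Name an event by the innermost `depth` routines on the call stack.

    call_stack is ordered from outermost to innermost routine.
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    return " => ".join(call_stack[-depth:])


stack = ["main", "multiply", "multiply-opt"]
print(callpath_name(stack))             # multiply => multiply-opt
print(callpath_name(stack, depth=100))  # main => multiply => multiply-opt
```

A larger depth distinguishes more calling contexts for the same routine, which is why the number of recorded events, and with it the overhead, grows with depth.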

3.4. Using Hardware Counters for Measurement

Performance counters exist on many modern microprocessors. They can count hardware performance events such as cache misses, floating point operations, etc. while the program executes on the processor. The Performance Data Standard and API (PAPI) package provides a uniform interface to access these performance counters.

To use these counters, you must first find out which PAPI events your system supports. To do so type:

%> papi_avail

Available events and hardware information.

-------------------------------------------------------------------------

Vendor string and code : AuthenticAMD (2)

Model string and code : AMD K8 Revision C (15)

CPU Revision : 2.000000

CPU Megahertz : 2592.695068

CPU's in this Node : 4

Nodes in this System : 1

Total CPU's : 4

Number Hardware Counters : 4

Max Multiplex Counters : 32

-------------------------------------------------------------------------

The following correspond to fields in the PAPI_event_info_t structure.

Name Code Avail Deriv Description (Note)

PAPI_L1_DCM 0x80000000 Yes Yes Level 1 data cache misses

PAPI_L1_ICM 0x80000001 Yes Yes Level 1 instruction cache misses

...

Next, to test the compatibility between each metric you wish papi to profile, use papi_event_chooser:

papi/utils> papi_event_chooser PAPI_LD_INS PAPI_SR_INS PAPI_L1_DCM

Test case eventChooser: Available events which can be added with given events.

-------------------------------------------

Vendor string and code : GenuineIntel (1)

Model string and code : Itanium 2 (2)

CPU Revision : 1.000000

CPU Megahertz : 1500.000000

CPU's in this Node : 16

Nodes in this System : 1

Total CPU's : 16

Number Hardware Counters : 4

Max Multiplex Counters : 32

-------------------------------------------

Event PAPI_L1_DCM can't be counted with others

Here the event chooser tells us that PAPI_LD_INS, PAPI_SR_INS, and PAPI_L1_DCM are incompatible metrics. Let's try again, this time removing PAPI_L1_DCM:

papi/utils> papi_event_chooser PAPI_LD_INS PAPI_SR_INS

Test case eventChooser: Available events which can be added with given events.

-------------------------------------------

Vendor string and code : GenuineIntel (1)

Model string and code : Itanium 2 (2)

CPU Revision : 1.000000

CPU Megahertz : 1500.000000

CPU's in this Node : 16

Nodes in this System : 1

Total CPU's : 16

Number Hardware Counters : 4

Max Multiplex Counters : 32

-------------------------------------------

Usage: eventChooser NATIVE|PRESET evt1 evet2 ...

Here the event chooser verifies that PAPI_LD_INS and PAPI_SR_INS are compatible metrics.

Next, make sure that you are using a makefile with papi in its name. Then set the environment variable TAU_METRICS to a colon delimited list of PAPI metrics you would like to use.

setenv TAU_METRICS PAPI_FP_OPS\:PAPI_L1_DCM
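When the application is launched from a script rather than an interactive shell, the same colon-delimited value can be assembled programmatically. The Python sketch below is one possible way to do that; the helper name tau_metrics_value is hypothetical, and the metric names are simply the ones from the command above.

```python
import os


def tau_metrics_value(metrics):
    """Join metric names into the colon-delimited form used by TAU_METRICS."""
    return ":".join(metrics)


# Copy the current environment and add TAU_METRICS for a child process.
# The resulting dict could be passed as env= to subprocess.run when
# launching the instrumented application.
run_env = dict(os.environ)
run_env["TAU_METRICS"] = tau_metrics_value(["PAPI_FP_OPS", "PAPI_L1_DCM"])

print(run_env["TAU_METRICS"])  # PAPI_FP_OPS:PAPI_L1_DCM
```

Copying the environment instead of modifying os.environ keeps the setting local to the run being measured.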

In addition to PAPI counters, we support TIME (via unix gettimeofday). On Linux and CrayCNL systems, we provide the high resolution LINUXTIMERS metric and on BGL/BGP systems we provide BGLTIMERS and BGPTIMERS.

4. Tracing

Typically, profiling shows the distribution of execution time across routines. It can show the code locations associated with specific bottlenecks, but it can not show the temporal aspect of performance variations. Tracing the execution of a parallel program shows when and where an event occurred, in terms of the process that executed it and the location in the source code. This chapter discusses how TAU can be used to generate event traces.

4.1. Generating Event Traces

To enable tracing with TAU, set the environment variable TAU_TRACE to 1. Similarly, you can enable or disable profiling with the TAU_PROFILE variable. Just as with profiling, you can set the output directory with an environment variable:

% setenv TRACEDIR /users/sameer/tracedata/experiment56

This will generate a trace file and an event file for each processor. To merge these files, use the tau_treemerge.pl script. If you want to convert TAU trace files into another format, use the tau2otf, tau2vtf, or tau2slog2 scripts.

5. Analyzing Parallel Applications

5.1. Text summary

For a quick summary of TAU performance data, use pprof. It reads and prints a summary of the TAU data in the current directory. For performance data with multiple metrics, move into one of the metric directories to get information about that metric:

%> cd MULTI__P_WALL_CLOCK_TIME

%> pprof

Reading Profile files in profile.*

NODE 0;CONTEXT 0;THREAD 0:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 24 590 1 1 590963 main

95.9 26 566 1 2 566911 multiply

47.3 279 279 1 0 279280 multiply-opt

44.1 260 260 1 0 260860 multiply-regular
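For quick scripting on top of this text summary, the rows can be split into their seven columns. The Python sketch below assumes purely numeric columns separated by whitespace, exactly as in the listing above; real pprof output may format large values differently, and the function name parse_pprof_row is hypothetical.

```python
def parse_pprof_row(line):
    """Split one pprof data row into named fields.

    Column order follows the header of the listing: %Time, exclusive msec,
    inclusive total msec, #Call, #Subrs, inclusive usec/call, name.
    """
    fields = line.split(None, 6)
    if len(fields) != 7:
        raise ValueError("expected 7 columns, got %d" % len(fields))
    pct, excl, incl, calls, subrs, usec_per_call, name = fields
    return {
        "percent_time": float(pct),
        "exclusive_msec": float(excl),
        "inclusive_msec": float(incl),
        "calls": float(calls),
        "subroutines": float(subrs),
        "inclusive_usec_per_call": float(usec_per_call),
        "name": name.strip(),
    }


rows = [
    "100.0 24 590 1 1 590963 main",
    "95.9 26 566 1 2 566911 multiply",
    "47.3 279 279 1 0 279280 multiply-opt",
    "44.1 260 260 1 0 260860 multiply-regular",
]
parsed = [parse_pprof_row(r) for r in rows]

# Routine with the largest exclusive time in the sample listing.
hottest = max(parsed, key=lambda r: r["exclusive_msec"])
print(hottest["name"])  # multiply-opt
```

Sorting by exclusive rather than inclusive time points at the routine that does the work itself, instead of main, which merely contains it.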

5.2. ParaProf

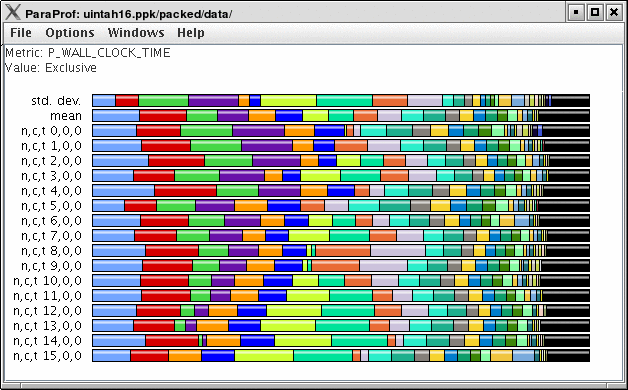

To launch ParaProf, execute paraprof from the command line where the profiles are located. Launching ParaProf will bring up the manager window and a window displaying the profile data as shown below.

For more information see the ParaProf - User’s Manual .

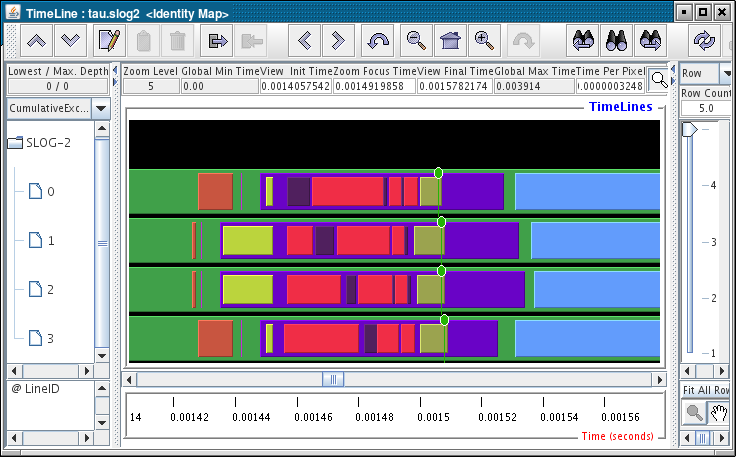

5.3. Jumpshot

To use Argonne’s Jumpshot (bundled with TAU), first merge and convert TAU traces to slog2 format:

% tau_treemerge.pl

% tau2slog2 tau.trc tau.edf -o tau.slog2

% jumpshot tau.slog2

Launching Jumpshot will bring up the main display window showing the entire trace; zoom in to see more detail.

6. Quick Reference

| Command, option or variable | Description |
|---|---|
| tau_run | TAU’s binary instrumentation tool |
| tau_cc.sh -tau_options=-optCompInst / tau_cxx.sh -tau_options=-optCompInst / tau_f90.sh -tau_options=-optCompInst / tau_upc.sh -tau_options=-optCompInst / tau_f77.sh -tau_options=-optCompInst | (Compiler instrumentation) |
| tau_cc.sh / tau_cxx.sh / tau_f90.sh / tau_f77.sh / tau_upc.sh | (PDT instrumentation) |
| TAU_MAKEFILE | Set instrumentation definition file |
| TAU_OPTIONS | Set instrumentation options |
| dynamic phase name='name' file='filename' line=start_line_# to line=end_line_# | Specify dynamic phase |
| loops file='filename' routine='routine name' | Instrument outer loops |
| memory file='filename' routine='routine name' | Track memory |
| io file='filename' routine='routine name' | Track IO |
| TAU_PROFILE / TAU_TRACE | Enable profiling and/or tracing |
| PROFILEDIR / TRACEDIR | Set profile/trace output directory |
| TAU_CALLPATH=1 / TAU_CALLPATH_DEPTH | Enable callpath profiling, set callpath depth |
| TAU_THROTTLE=1 / TAU_THROTTLE_NUMCALLS / TAU_THROTTLE_PERCALL | Enable event throttling, set number of calls and per-call (us) threshold |
| TAU_METRICS | List of PAPI metrics to profile |
| tau_treemerge.pl | Merge traces to one file |
| tau2otf / tau2vtf / tau2slog2 | Trace conversion tools |

7. Some Common Application Scenarios

7.1. Q. What routines account for the most time? How much?

- Create a flat profile with wallclock time.

Here is how to generate a flat profile with MPI:

% setenv TAU_MAKEFILE /opt/apps/tau/tau2/x86_64/lib/Makefile.tau-mpi-pdt-pgi

% set path=(/opt/apps/tau/tau2/x86_64/bin $path)

% make F90=tau_f90.sh

(Or edit Makefile and change F90=tau_f90.sh)

% qsub run.job

% paraprof --pack app.ppk

Move the app.ppk file to your desktop.

% paraprof app.ppk

7.2. Q. What loops account for the most time? How much?

- Create a flat profile with wallclock time with loop instrumentation.

Here is how to instrument loops in an application:

% setenv TAU_MAKEFILE /opt/apps/tau/tau2/x86_64/lib/Makefile.tau-mpi-pdt

% setenv TAU_OPTIONS '-optTauSelectFile=select.tau -optVerbose'

% cat select.tau

BEGIN_INSTRUMENT_SECTION

loops routine="#"

END_INSTRUMENT_SECTION

% set path=(/opt/apps/tau/tau2/x86_64/bin $path)

% make F90=tau_f90.sh

(Or edit Makefile and change F90=tau_f90.sh)

% qsub run.job

% paraprof --pack app.ppk

Move the app.ppk file to your desktop.

% paraprof app.ppk

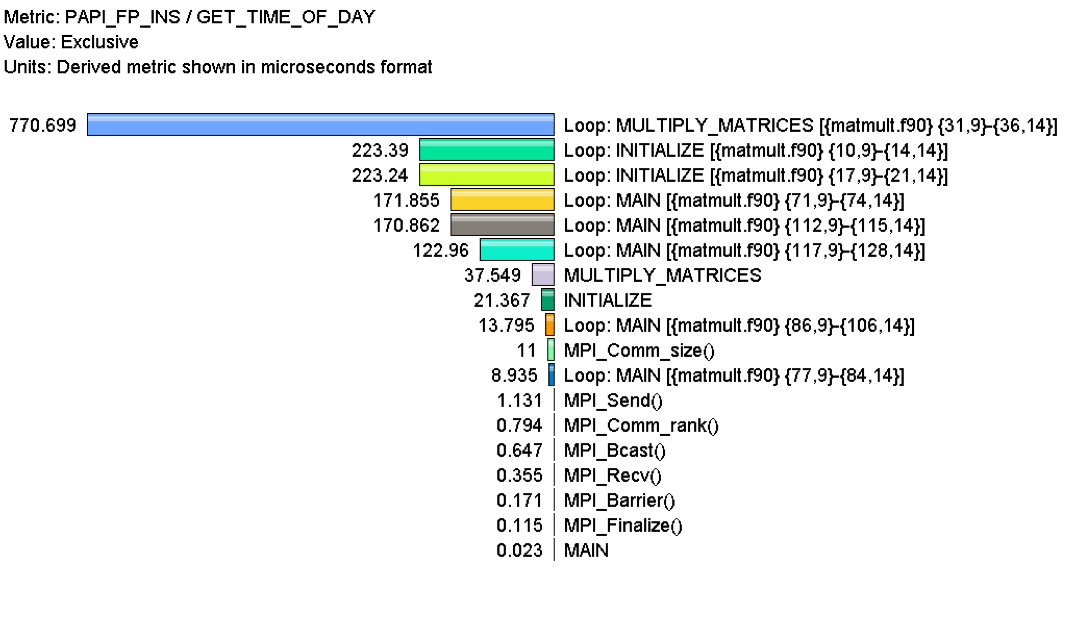

7.3. Q. What MFlops am I getting in all loops?

-

Create a flat profile with PAPI_FP_INS/OPS and time with loop instrumentation.

Here is how to generate a flat profile with FP operations

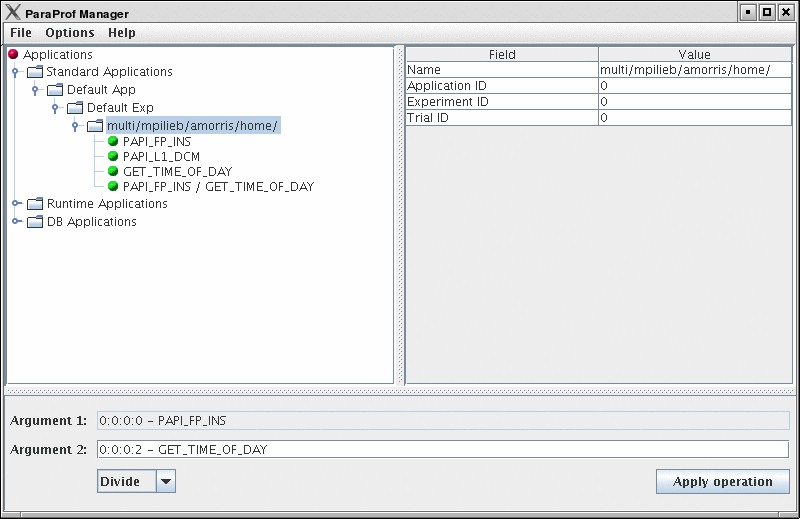

% setenv TAU_MAKEFILE /opt/apps/tau/tau2/x86_64/lib/Makefile.tau-papi-mpi-pdt-pgi % setenv TAU_OPTIONS ‘-optTauSelectFile=select.tau –optVerbose’ % cat select.tau BEGIN_INSTRUMENT_SECTION loops routine=“#” END_INSTRUMENT_SECTION % set path=(/opt/apps/tau/tau2/x86_64/bin $path) % make F90=tau_f90.sh (Or edit Makefile and change F90=tau_f90.sh) % setenv TAU_METRICS GET_TIME_OF_DAY\:PAPI_FP_INS % qsub run.job % paraprof -–pack app.ppk Move the app.ppk file to your desktop. % paraprof app.ppk Choose 'Options' -> 'Show Derived Panel' -> Arg 1 = PAPI_FP_INS, Arg 2 = GET_TIME_OF_DAY, Operation = Divide -> Apply, close.

7.4. Q. Who calls MPI_Barrier()? Where?

- Create a callpath profile with given depth.

Here is how to generate a callpath profile with MPI:

% setenv TAU_MAKEFILE /opt/apps/tau/tau2/x86_64/lib/Makefile.tau-mpi-pdt

% set path=(/opt/apps/tau/tau2/x86_64/bin $path)

% make F90=tau_f90.sh

(Or edit Makefile and change F90=tau_f90.sh)

% setenv TAU_CALLPATH 1

% setenv TAU_CALLPATH_DEPTH 100

% qsub run.job

% paraprof --pack app.ppk

Move the app.ppk file to your desktop.

% paraprof app.ppk

(Windows -> Thread -> Call Graph)

7.5. Q. How do I instrument Python Code?

- Create a Python wrapper library.

Here is how to instrument Python code:

% setenv TAU_MAKEFILE /opt/apps/tau/tau2/x86_64/lib/Makefile.tau-icpc-python-mpi-pdt

% set path=(/opt/apps/tau/tau2/x86_64/bin $path)

% setenv TAU_OPTIONS '-optShared -optVerbose'

(Python needs shared object based TAU library)

% make F90=tau_f90.sh CXX=tau_cxx.sh CC=tau_cc.sh (build pyMPI w/TAU)

% cat wrapper.py

import tau

def OurMain():

    import App

tau.run('OurMain()')

Uninstrumented:

% mpirun.lsf /pyMPI-2.4b4/bin/pyMPI ./App.py

Instrumented:

% setenv PYTHONPATH <taudir>/x86_64/<lib>/bindings-python-mpi-pdt-pgi

(same options string as TAU_MAKEFILE)

% setenv LD_LIBRARY_PATH <taudir>/x86_64/lib/bindings-icpc-python-mpi-pdt-pgi\:$LD_LIBRARY_PATH

% mpirun -np 4 <dir>/pyMPI-2.4b4-TAU/bin/pyMPI ./wrapper.py

(Instrumented pyMPI with wrapper.py)

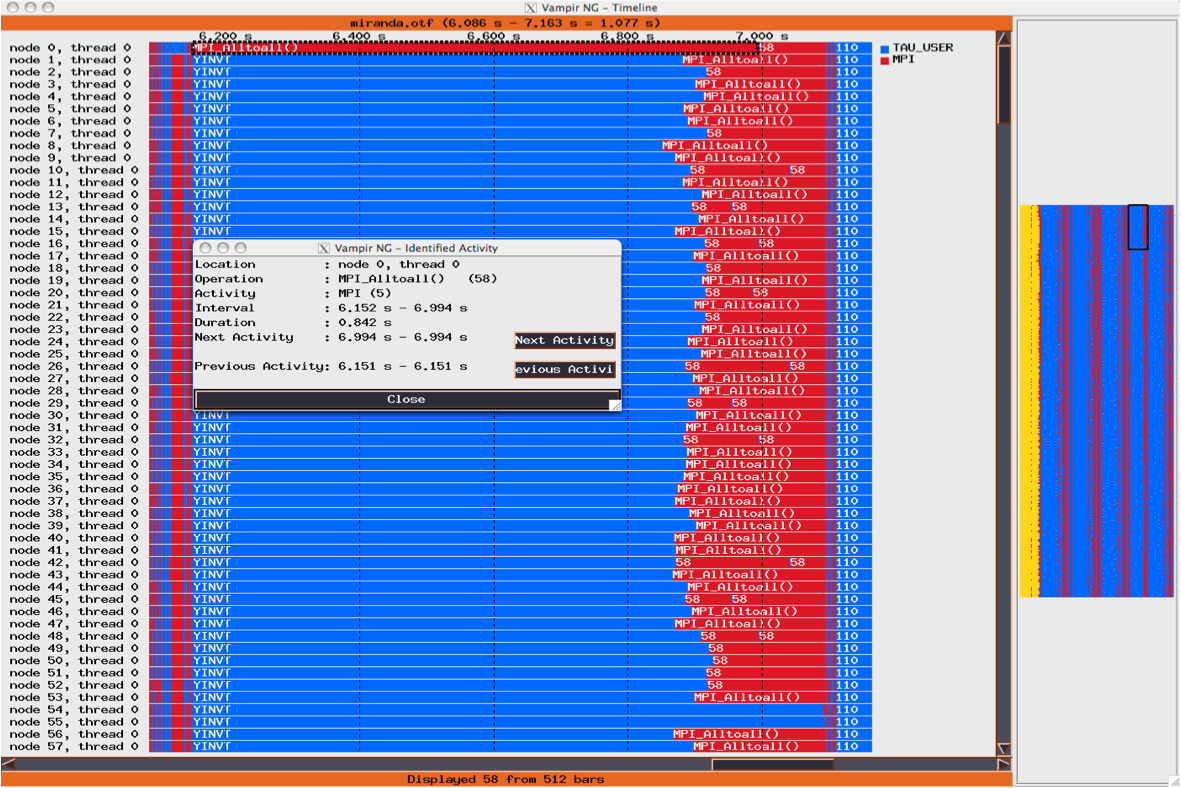

7.6. Q. What happens in my code at a given time?

- Create an event trace.

Here is how to create a trace:

% setenv TAU_MAKEFILE /opt/apps/tau/tau2/x86_64/lib/Makefile.tau-mpi-pdt-pgi

% set path=(/opt/apps/tau/tau2/x86_64/bin $path)

% make F90=tau_f90.sh

(Or edit Makefile and change F90=tau_f90.sh)

% setenv TAU_TRACE 1

% qsub run.job

% tau_treemerge.pl

(merges binary traces to create tau.trc and tau.edf files)

JUMPSHOT:

% tau2slog2 tau.trc tau.edf -o app.slog2

% jumpshot app.slog2

OR VAMPIR:

% tau2otf tau.trc tau.edf app.otf -n 4 -z

(4 streams, compressed output trace)

% vampir app.otf

(or vng client with vngd server)

7.7. Q. How does my application scale?

- Examine profiles in PerfExplorer.

Here is how to examine a series of profiles in PerfExplorer:

% setenv TAU_MAKEFILE /opt/apps/tau/tau2/x86_64/lib/Makefile.tau-mpi-pdt

% set path=(/opt/apps/tau/tau2/x86_64/bin $path)

% make F90=tau_f90.sh

(Or edit Makefile and change F90=tau_f90.sh)

% qsub run1p.job

% paraprof --pack 1p.ppk

% qsub run2p.job

% paraprof --pack 2p.ppk

...and so on.

On your client:

% taudb_configure --create-default

(taudb_configure run without any arguments will prompt for advanced options)

% perfexplorer_configure

(Yes to load schema, defaults)

% paraprof

(load each trial: Right click on trial -> Upload trial to DB)

% perfexplorer

(Charts -> Speedup)

8. ParaProf - User’s Manual

8.1. Introduction

ParaProf is a portable, scalable performance analysis tool included with the TAU distribution.

ParaProf requires Oracle / Sun’s Java 1.5 Runtime Environment for basic functionality. Java JOGL (included) is required for 3d visualization and image export. Additionally, OpenGL is required for 3d visualization.

Most windows in ParaProf can export bitmap (png/jpg) and vector (svg/eps) images to disk or print directly to a printer. These options are available through the File menu.

8.1.1. Using ParaProf from the command line

ParaProf is a Java program that is run from the supplied paraprof script (paraprof.bat for the Windows binary release).

% paraprof --help

Usage: paraprof [options] <files/directory>

Options:

-f, --filetype <filetype> Specify type of performance data, options are:

profiles (default), pprof, dynaprof, mpip,

gprof, psrun, hpm, packed, cube, hpc, ompp

snap, perixml, gptl, ipm, google

--range a-b:c Load only profiles from the given range(s) of processes

Seperate individual ids or dash-defined ranges with colons

-h, --help Display this help message

The following options will run only from the console (no GUI will launch):

--merge <file.gz> Merges snapshot profiles

--pack <file> Pack the data into packed (.ppk) format

--dump Dump profile data to TAU profile format

--dumprank <rank> Dump profile data for <rank> to TAU profile format

-v, --dumpsummary Dump derived statistical data to TAU profile format

--overwrite Allow overwriting of profiles

-o, --oss Print profile data in OSS style text output

-q, --dumpmpisummary Print high level time and communication summary

-d, --metadump Print profile metadata (works with --dumpmpisummary)

-x, --suppressmetrics Exclude child calls and exclusive time from --dumpmpisummary

-s, --summary Print only summary statistics

(only applies to OSS output)

Notes:

For the TAU profiles type, you can specify either a specific set of profile

files on the commandline, or you can specify a directory (by default the current

directory). The specified directory will be searched for profile.*.*.* files,

or, in the case of multiple counters, directories named MULTI_* containing

profile data.
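The --range syntax in the help text (individual ids or dash-defined ranges, separated by colons) can be illustrated with a short parser. This Python sketch only demonstrates the syntax; it is not ParaProf's own code, the function name parse_range is hypothetical, and treating a-b as inclusive of both ends is an assumption.

```python
def parse_range(spec):
    """Expand a --range style string such as '0-3:7' into a list of ids.

    Assumption: a dash-defined range includes both of its end points.
    """
    ids = []
    for part in spec.split(":"):
        if "-" in part:
            low_text, high_text = part.split("-", 1)
            low, high = int(low_text), int(high_text)
            if low > high:
                raise ValueError("range %r runs backwards" % part)
            ids.extend(range(low, high + 1))
        else:
            ids.append(int(part))
    return ids


print(parse_range("0-3:7"))      # [0, 1, 2, 3, 7]
print(parse_range("16:32-34"))   # [16, 32, 33, 34]
```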

8.1.2. Supported Formats

ParaProf can load profile data from many sources. The types currently supported are:

TAU Profiles (profiles) - Output from the TAU measurement library, these files generally take the form of profile.X.X.X , one for each node/context/thread combination. When multiple counters are used, each metric is located in a directory prefixed with "MULTI". To launch ParaProf with all the metrics, simply launch it from the root of the MULTI directories.

ParaProf Packed Format (ppk) - Export format supported by PerfDMF/ParaProf. Typically .ppk.

TAU Merged Profiles (snap) - Merged and snapshot profile format supported by TAU. Typically tauprofile.xml.

TAU pprof (pprof) - Dump Output from TAU’s pprof -d . Provided for backward compatibility only.

DynaProf (dynaprof) - Output From DynaProf’s wallclock and papi probes.

mpiP (mpip) - Output from mpiP.

gprof (gprof) - Output from gprof, see also the --fixnames option.

PerfSuite (psrun) - Output from PerfSuite psrun files.

HPM Toolkit (hpm) - Output from IBM’s HPM Toolkit.

Cube (cube) - Output from Kojak Expert tool for use with Cube.

Cube3 (cube3) - Output from Kojak Expert tool for use with Cube3 and Cube4.

HPCToolkit (hpc) - XML data from hpcquick. Typically, the user runs hpcrun, then hpcquick on the resulting binary file.

OpenMP Profiler (ompp) - CSV format from the ompP OpenMP Profiler (http://www.ompp-tool.com). The user must use OMPP_OUTFORMAT=CSV.

PERI XML (perixml) - Output from the PERI data exchange format.

General Purpose Timing Library (gptl) - Output from the General Purpose Timing Library.

Paraver (paraver) - 2D output from the Paraver trace analysis tool from BSC.

IPM (ipm) - Integrated Performance Monitoring format, from NERSC.

Google (google) - Google Profiles.
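For the TAU Profiles format at the top of this list, the profile.X.X.X file names encode the node, context, and thread of each profile. The Python sketch below decodes such names and groups them by node; the names parse_profile_name and by_node are hypothetical and the file list is made up for illustration.

```python
import re
from collections import defaultdict

# profile.<node>.<context>.<thread>
PROFILE_NAME = re.compile(r"^profile\.(\d+)\.(\d+)\.(\d+)$")


def parse_profile_name(filename):
    """Return (node, context, thread) for a TAU profile file name, or None."""
    match = PROFILE_NAME.match(filename)
    if match is None:
        return None
    node, context, thread = (int(g) for g in match.groups())
    return node, context, thread


# Illustrative file names, not taken from a real run.
files = ["profile.0.0.0", "profile.0.0.1", "profile.1.0.0", "README"]

by_node = defaultdict(list)
for name in files:
    ids = parse_profile_name(name)
    if ids is not None:
        by_node[ids[0]].append(name)

print(dict(by_node))
# {0: ['profile.0.0.0', 'profile.0.0.1'], 1: ['profile.1.0.0']}
```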

8.1.3. Command line options

In addition to specifying the profile format, the user can also specify the following options:

- --fixnames - Use the fixnames option for gprof. When C and Fortran code are mixed, the C routines have to be mapped to either .function or function_. This option strips the leading period or trailing underscore, if it is there.

- --pack <file> - Rather than load the data and launch the GUI, pack the data into the specified file.

- --dump - Rather than load the data and launch the GUI, dump the data to TAU Profiles. This can be used to convert supported formats to TAU Profiles.

- --oss - Outputs profile data in OSS style. Example:

-------------------------------------------------------------------------------

Thread: n,c,t 0,0,0

-------------------------------------------------------------------------------

excl.secs excl.% cum.% PAPI_TOT_CYC PAPI_FP_OPS calls function

0.005 56.0% 56.0% 13475345 4194518 1 foo

0.003 40.1% 96.1% 9682185 4205367 1 bar

0 3.6% 99.7% 223173 17445 1 baz

2.2E-05 0.3% 100.0% 14663 206 1 main

- --summary - Output only summary information for OSS style output.

8.2. Views and Sub-Views

In the past, PerfDMF used a hierarchy of Applications and Experiments to organize Trials. This approach was too rigid, so in TAUdb, trials are organized by dynamic Views. Views are lists of Trials that share a given metadata value. For example, a View could contain all the Trials where the total number of threads is less than 16. Views can also have Sub-Views. For example, it might be useful to have a View of all Trials from a certain machine and then Sub-Views for each executable run on that machine. Trials can belong to any number of Views and Sub-Views, and new Trials loaded to the database will be sorted into Views automatically.

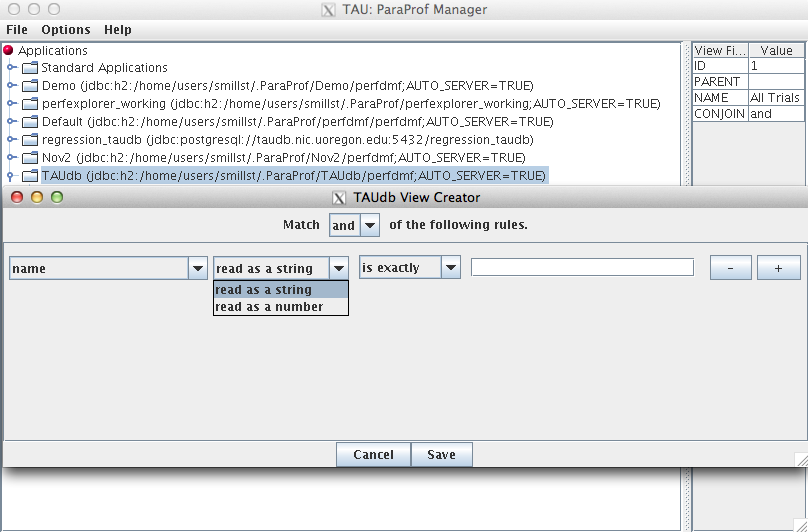

8.2.1. To Create a (Sub-)View

Launch ParaProf and Right click on a database or an existing View and select "Add View" or "Add Sub-View."

This will launch the View Creator window.

Here you can create the rule(s) that determine which Trials appear in this new View. At the top you can choose to match all of the rules ("and") or to match any of the rules. The "-" and "+" buttons remove the current rule or add a new one. The first drop-down box chooses which metadata field to use. The second box chooses whether the field should be read as a string or a number. Depending on whether it is read as a string or a number, the fourth box will give options on how to compare the metadata field. So to create a View for all trials that have fewer than 16 threads, select total_threads, read as a number, is less than, 16. Then click Save and give the View a name.
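The effect of these rule settings can be sketched in a few lines of Python. This is only an illustration of the matching logic, not ParaProf or TAUdb code: the function names, the comparison labels other than "is less than", and the assumption that metadata values are stored as strings are all hypothetical.

```python
import operator

# Only "is less than" comes from the text; the other labels are placeholders.
COMPARISONS = {
    "is less than": operator.lt,
    "is equal to": operator.eq,
    "is greater than": operator.gt,
}


def rule_matches(metadata, field, read_as, comparison, value):
    """Evaluate one rule against a trial's metadata (a dict of strings)."""
    if field not in metadata:
        return False
    cast = float if read_as == "number" else str
    try:
        return COMPARISONS[comparison](cast(metadata[field]), cast(value))
    except ValueError:
        return False  # field could not be read as a number


def view_contains(metadata, rules, match_all=True):
    """Combine rule results with 'and' (match_all) or 'any'."""
    results = [rule_matches(metadata, *rule) for rule in rules]
    return all(results) if match_all else any(results)


trial = {"total_threads": "8", "hostname": "node01"}

as_number = [("total_threads", "number", "is less than", "16")]
as_string = [("total_threads", "string", "is less than", "16")]

print(view_contains(trial, as_number))  # True: 8 < 16
print(view_contains(trial, as_string))  # False: "8" sorts after "16"
```

The last two lines show why the string or number choice matters: compared as text, "8" is not less than "16", so a thread-count rule only behaves as intended when the field is read as a number.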

The 'Edit' context menu option on an existing view will allow you to view and alter the view’s criteria in the same interface.

8.3. Profile Data Management

ParaProf uses PerfDMF to manage profile data. This enables it to read the various profile formats as well as store and retrieve them from a database.

8.3.1. ParaProf Manager Window

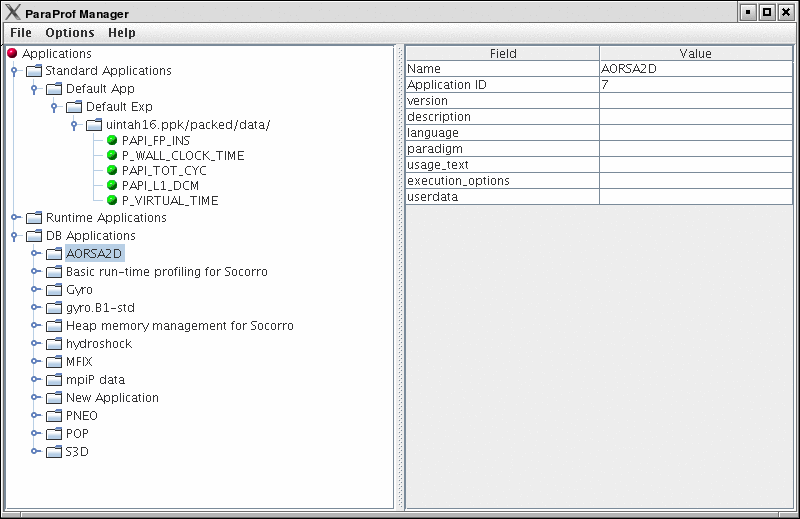

Upon launching ParaProf, the user is greeted with the ParaProf Manager Window.

This window is used to manage profile data. The user can upload/download profile data, edit meta-data, launch visual displays, export data, derive new metrics, etc.

8.3.2. Loading Profiles

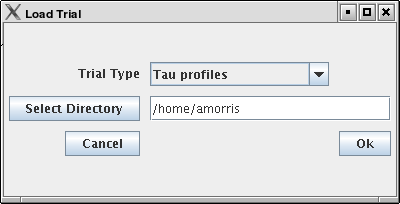

To load profile data, select File→Open, or right click on the Application’s tree and select "Add Trial".

Select the type of data from the "Trial Type" drop-down box. For TAU Profiles, select a directory, for other types, files.

8.3.3. Database Interaction

Database interaction is done through the tree view of the ParaProf Manager Window. Applications expand to Experiments, Experiments to Trials, and Trials are loaded directly into ParaProf just as if they were read off disk. Additionally, the meta-data associated with each element is shown on the right, as in ParaProf Manager Window. A trial can be exported by right-clicking on it and selecting "Export as Packed Profile".

New trials can be uploaded to the database by either right-clicking on an entity in the database and selecting "Add Trial", or by right-clicking on an Application/Experiment/Trial hierarchy from the "Standard Applications" and selecting "Upload Application/Experiment/Trial to DB".

8.3.4. Creating Derived Metrics

ParaProf can create derived metrics using the Derived Metric Panel, available from the Options menu of the ParaProf Manager Window.

In Creating Derived Metrics, we have just divided Floating Point Instructions by Wall-clock time, creating FLOPS (Floating Point Operations per Second). The second argument is a user-editable text box and can be filled in with scalar values by using the keyword 'val' (e.g. "val 1.5").
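The arithmetic behind such a derived metric is a per-function division of one metric by another. The Python sketch below works through it with invented numbers; the function name derive_divide is hypothetical, and it assumes time values are recorded in microseconds, in which case a count divided by time is directly in millions of operations per second.

```python
def derive_divide(arg1, arg2):
    """Divide two per-function metrics, skipping functions with a zero divisor."""
    return {
        name: arg1[name] / arg2[name]
        for name in arg1
        if name in arg2 and arg2[name] != 0
    }


# Invented values for illustration only.
fp_instructions = {"multiply": 2.0e9, "main": 1.0e6}
wall_clock_usec = {"multiply": 5.0e5, "main": 2.0e4}

# instructions / microseconds = million instructions per second
mflops = derive_divide(fp_instructions, wall_clock_usec)
print(mflops)  # {'multiply': 4000.0, 'main': 50.0}
```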

8.3.5. Main Data Window

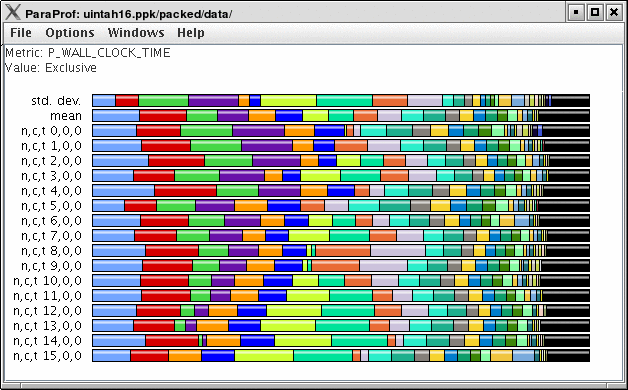

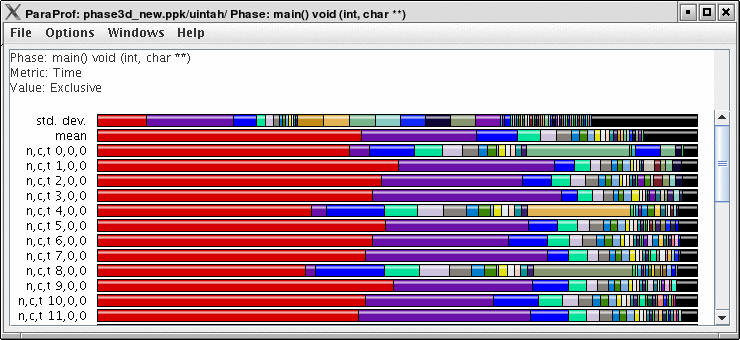

Upon loading a profile, or double-clicking on a metric, the Main Data Window will be displayed.

This window shows each thread as well as statistics as a combined bar graph. Each function is represented by a different color (though possibly cycled). From anywhere in ParaProf, you can right-click on objects representing threads or functions to launch displays associated with those objects. For example, in Main Data Window, right-click on the text n,c,t, 8,0,0 to launch thread-based displays for node 8.

You may also turn off the stacking of bars so that individual functions can be compared across threads in a global display.

8.4. 3-D Visualization

ParaProf displays massive parallel profiles in the 3D Visualization window, which uses OpenGL hardware acceleration. Each window is fully configurable with rotation, translation, and zooming capabilities. Rotation is accomplished by holding the left mouse button down and dragging the mouse. Translation is done likewise with the right mouse button. Zooming is done with the mouse wheel and the + and - keys.

8.4.1. Triangle Mesh Plot

This visualization method shows two metrics for all functions, all threads. The height represents one chosen metric, and the color, another. These are selected from the drop-down boxes on the right.

To pinpoint a specific value in the plot, move the Function and Thread sliders to cycle through the available functions/threads. The values of the two metrics for the selected function and thread are then shown; in this case, for MPI_Recv() on Node 351, the value is 14.37 seconds.

8.4.2. 3-D Bar Plot

This visualization method is similar to the triangle mesh plot. It simply displays the data using 3-D bars instead of a mesh. The controls work the same. Note that in 3-D Mesh Plot the transparency option is selected, which changes the way in which the selection model operates.

8.4.3. 3-D Scatter Plot

This visualization method plots the value of each thread along up to 4 axes. Each axis represents a different function and metric. This view allows you to discern clustering of values and relationships between functions across threads.

Select functions using the button for each dimension, then select a metric. A single function across 4 metrics could be used, for example.

8.4.4. 3-D Topology Plot

In this visualization, you can either define the layout with a MESP topology definition file or fill a rectangular prism of user-defined volume with rank-points in order of rank. For additional details on MESP topology definitions, please see the etc/topology directory.

If the loaded profile is a cube file or a profile from a BGB, then this visualization groups the threads in two- or three-dimensional space using topology information supplied by the profile.

When topology metadata is available, a trial-specific topological layout may be visualized by selecting Windows → 3D Visualization and selecting Topology Plot on the visualization pane.

The layout tab allows control of the layout and display of visualized cores/processes.

Minimum/Maximum Visible (restricts display of nodes with measured values above/below the selected levels). Lock Range causes the sliders to move in unison.

The X/Y/Z Axis sliders allow selection of planes, lines and individual points in the topology for examination of specific values in the display, listed in the Avg. Color Value field.

The topology selection dropdown box allows selection of either trial-specific topologies contained in the metadata, mapped topologies stored in an external file or a custom topology defined by the size of the prism containing the visualized cores. The … button allows selection of a custom topology mapping file while the map button allows selection of a map file (see <tau2>/etc/topology/README.cray_map for more information on generating map files).

If Custom is selected, the dimensions of the rectangular prism containing the cores are defined by the X/Y/Z axis control widgets.

The Events tab controls which events are used to define the color values and positions of cores/processes in the display. For trial-specific and Custom topologies only event3 (Color) can be changed. For topologies loaded from MESP definition files all four events may be used in calculation of the topology layout. In either case, interval, atomic or metadata values may be used to color or position points in the display.

8.4.5. 3-D Communication Matrix

If a Trial has communication information (set TAU_COMM_MATRIX=1 at runtime of your application), then you can launch the 3D Communication window as shown.

8.5. Thread Based Displays

ParaProf displays several windows that show data for one thread of execution. In addition to per thread values, the users may also select mean or standard deviation as the "thread" to display. In this mode, the mean or standard deviation of the values across the threads will be used as the value.

8.5.1. Thread Bar Graph

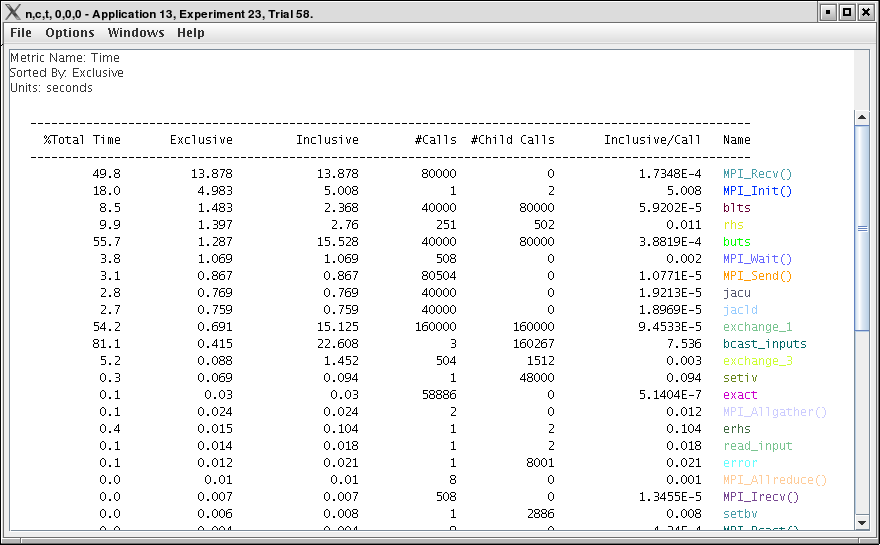

This display graphs each function on a particular thread for comparison. The metric, units, and sort order can be changed from the Options menu.

8.5.2. Thread Statistics Text Window

This display shows a pprof style text view of the data.

8.5.3. Thread Statistics Table

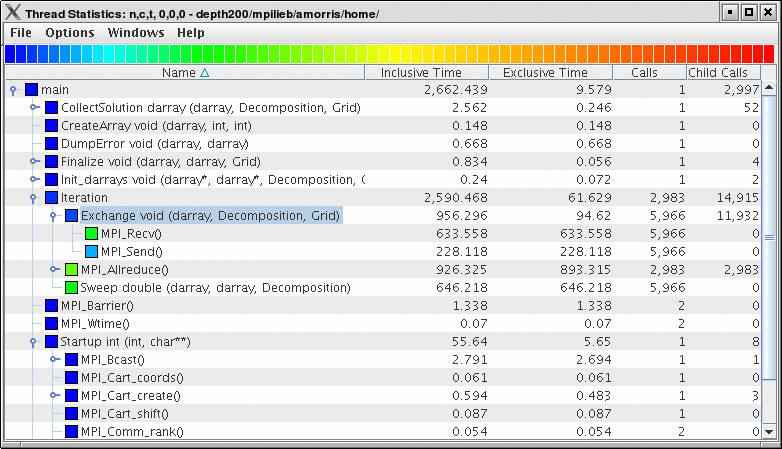

This display shows the callpath data in a table. Each callpath can be traced from root to leaf by opening each node in the tree view. A colorscale immediately draws attention to "hot spots", the areas that contain the highest values.

The display can be used in one of two ways. In "inclusive/exclusive" mode, both the inclusive and exclusive values are shown for each path; see Thread Statistics Table, inclusive and exclusive for an example.

When this option is off, the inclusive value for a node is shown when it is closed, and the exclusive value is shown when it is open. This allows the user to more easily see where the time is spent, since the total time for the application will always be represented in one column. See Thread Statistics Table and Thread Statistics Table for examples. This display also functions as a regular statistics table without callpath data. The data can be sorted by column by clicking on the column heading. When multiple metrics are available, you can add and remove columns for the display using the menu.

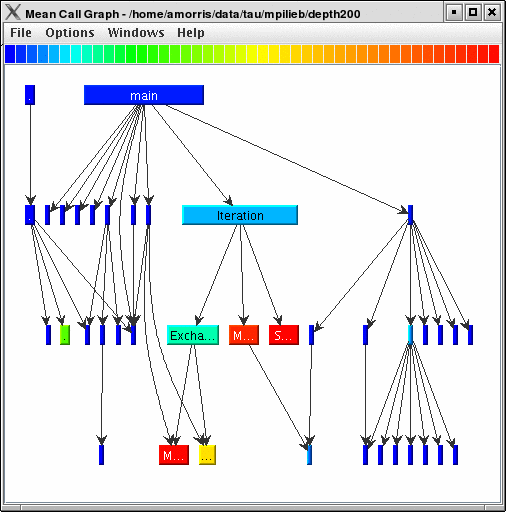

8.5.4. Call Graph Window

This display shows callpath data in a graph using two metrics, one determines the width, the other the color. The full name of the function as well as the two values (color and width) are displayed in a tooltip when hovering over a box. By clicking on a box, the actual ancestors and descendants for that function and their paths (arrows) will be highlighted with blue. This allows you to see which functions are called by which other functions since the interplay of multiple paths may obscure it.

8.5.5. Thread Call Path Relations Window

This display shows callpath data in a gprof style view. Each function is shown with its immediate parents. For example, Thread Call Path Relations Window shows that MPI_Recv() is called from two places for a total of 9.052 seconds. Most of that time comes from the 30 calls when MPI_Recv() is called by MPIScheduler::postMPIRecvs(). The other 60 calls do not amount to much time.

8.5.6. User Event Statistics Window

This display shows a pprof style text view of the user event data. Right-clicking on a User Event will give you the option to open a Bar Graph for that particular User Event across all threads. See User Event Bar Graph.

8.5.7. User Event Thread Bar Chart

This display shows a particular thread’s user defined event statistics as a bar chart. This is the same data from the User Event Statistics Window, in graphical form.

8.6. Function Based Displays

ParaProf has two displays for showing a single function across all threads of execution. This chapter describes the Function Bar Graph Window and the Function Histogram Window.

8.6.1. Function Bar Graph

This display graphs the values that the particular function had for each thread along with the mean and standard deviation across the threads. You may also change the units and metric displayed from the Options menu.

8.6.2. Function Histogram

This display shows a histogram of each thread’s value for the given function. Hover the mouse over a given bar to see the range minimum and maximum and how many threads fell into that range. You may also change the units and metric displayed from the Options menu.

You may also dynamically change how many bins are used (1-100) in the histogram. This option is available from the Options menu. Changing the number of bins can dramatically change the shape of the histogram, so try several settings to get a feel for the true distribution of the data.
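The effect of the bin count can be illustrated with a small, self-contained sketch. The equal-width binning below is an assumption made for illustration (the guide does not describe how ParaProf computes its bins), and the per-thread values are hypothetical.

```python
def histogram(values, num_bins):
    """Count values in num_bins equal-width bins spanning [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / num_bins
    if width == 0:
        width = 1.0  # all values identical: everything lands in the first bin
    counts = [0] * num_bins
    for v in values:
        # Clamp so that the maximum value falls into the last bin.
        idx = min(int((v - lo) / width), num_bins - 1)
        counts[idx] += 1
    return counts

# Hypothetical per-thread values for one function (seconds).
thread_values = [1.0, 1.1, 1.2, 4.8, 4.9, 5.0, 9.0]

coarse = histogram(thread_values, 2)  # two wide bins
fine = histogram(thread_values, 4)    # four narrower bins
```

With two bins the middle group of threads is merged with the fast threads; with four bins it appears as a separate group.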

8.7. Phase Based Displays

When a profile contains phase data, ParaProf will automatically run in phase mode. Most displays will show data for a particular phase. This phase will be displayed in the top left corner in the meta data panel.

8.7.1. Using Phase Based Displays

The initial window will default to the top-level phase, usually main.

To access other phases, either right click on the phase and select, "Open Profile for this Phase", or go to the Phase Ledger and select it there.

ParaProf can also display a particular function’s value across all of the phases. To do so, right click on a function and select, "Show Function Data over Phases".

Because Phase information is implemented as callpaths, many of the callpath displays will show phase data as well. For example, the Call Path Text Window is useful for showing how functions behave across phases.

8.8. Comparative Analysis

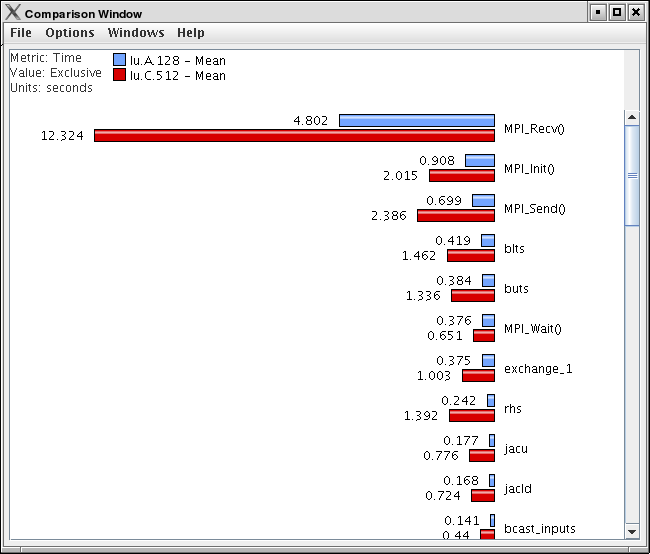

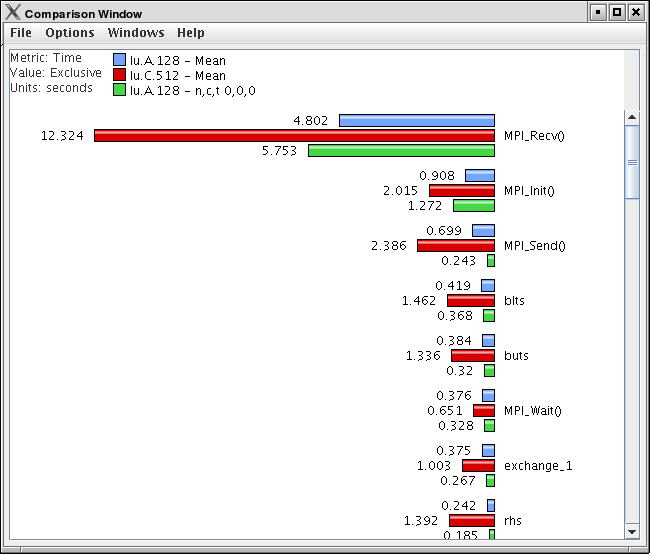

ParaProf can perform cross-thread and cross-trial analysis. In this way, you can compare two or more trials and/or threads in a single display.

8.8.1. Using Comparative Analysis

Comparative analysis in ParaProf is based on individual threads of execution. There is a maximum of one Comparison window for a given ParaProf session. To add threads to the window, right-click on them and select "Add Thread to Comparison Window". The Comparison Window will pop up with the thread selected. Note that "mean" and "std. dev." are considered threads for this and most other purposes.

Add additional threads, from any trial, by the same means.

8.9. Miscellaneous Displays

8.9.1. User Event Bar Graph

In addition to displaying the text statistics for User Defined Events, ParaProf can also graph a particular User Event across all threads.

This display graphs the value that the particular user event had for each thread.

8.9.2. Ledgers

ParaProf has three ledgers that show the functions, groups, and user events.

Function Ledger

The function ledger shows each function along with its current color. As with other displays showing functions, you may right-click on a function to launch other function-specific displays.

Group Ledger

The group ledger shows each group along with its current color. This ledger is especially important because it gives you the ability to mask all of the other displays based on group membership. For example, you can right-click on the MPI group and select "Show This Group Only" and all of the windows will now mask to only those functions which are members of the MPI group. You may also mask by the inverse by selecting "Show All Groups Except This One" to mask out a particular group.

User Event Ledger

The user event ledger shows each user event along with its current color.

8.9.3. Selective Instrumentation File Generator

ParaProf can also help you refine your program performance by excluding some functions from instrumentation. You can select rules to determine which functions get excluded; both rules must be true for a given function to be excluded. Each function that will be excluded based on these rules is listed below.

Only the functions profiled in ParaProf can be excluded. If you had previously set up selective instrumentation for this application, the functions that were previously excluded will no longer be excluded.
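As a rough sketch of the idea, the snippet below applies two rules to a small profile and writes the matching functions between the BEGIN_EXCLUDE_LIST and END_EXCLUDE_LIST markers of a TAU selective instrumentation file. The two rules used here (a minimum call count and a maximum time per call), the thresholds, the function names and the profile numbers are all assumptions chosen for illustration; they are not taken from ParaProf itself.

```python
def build_exclude_list(profile, min_calls, max_usec_per_call):
    """Return selective-instrumentation text excluding functions that satisfy both rules.

    profile maps a function signature to (number of calls, exclusive time in usec).
    """
    excluded = []
    for name, (calls, exclusive_usec) in profile.items():
        per_call = exclusive_usec / calls if calls else 0.0
        # Both rules must hold: called very often AND cheap per call.
        if calls > min_calls and per_call < max_usec_per_call:
            excluded.append(name)
    lines = ["BEGIN_EXCLUDE_LIST"] + sorted(excluded) + ["END_EXCLUDE_LIST"]
    return "\n".join(lines)

# Hypothetical profile data (not measured).
profile = {
    "void tiny_helper(int)": (2_000_000, 1_500_000.0),  # 0.75 usec per call
    "void solve(double *)": (50, 9_000_000.0),          # few calls, expensive
    "int lookup(int)": (500_000, 40_000_000.0),         # 80 usec per call
}

select_file = build_exclude_list(profile, min_calls=100_000, max_usec_per_call=10.0)
```

Only `tiny_helper` satisfies both rules, so it is the only entry in the generated exclude list.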

8.10. Preferences

Preferences are modified from the ParaProf Preferences Window, launched from the File menu. Preferences are saved between sessions in the .ParaProf/ParaProf.prefs file.

8.10.1. Preferences Window

The preferences window allows the user to modify the behavior and display style of ParaProf’s windows. The font size affects bar height; a sample display is shown in the upper-right.

The Window defaults section will determine the initial settings for new windows. You may change the initial units selection and whether you want values displayed as percentages or as raw values.

The Settings section controls the following:

-

Show Path Title in Reverse - Path titles will normally be shown in normal order (/home/amorris/data/etc). They can be reversed using this option (etc/data/amorris/home). This only affects loaded trials and the titlebars of new windows.

-

Reverse Call Paths - This option will immediately change the display of all callpath functions between Root ⇒ Leaf and Leaf ⇐ Root.

-

Statistics Computation - Turning this option on causes the mean computation to take the sum of values for a function across all threads and divide it by the total number of threads. With this option off, the sum will only be divided by the number of threads that actively participated in the sum. This way the user can control whether or not threads which do not call a particular function are considered as a 0 in the computation of statistics.

-

Generate Reverse Calltree Data - This option will enable the generation of reverse callpath data necessary for the reverse callpath option of the statistics tree-table window.

-

Show Source Locations - This option will enable the display of source code locations in event names.
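The difference between the two settings of the Statistics Computation option can be seen in a small sketch. The function name and the use of `None` to mark a thread that never called the function are illustrative assumptions, not ParaProf internals.

```python
def mean_across_threads(values, count_missing_as_zero):
    """Mean of a function's value across threads.

    values holds one entry per thread; None marks a thread that never
    called the function (an illustrative convention).
    """
    participating = [v for v in values if v is not None]
    if not participating:
        return 0.0
    # Option on: divide by all threads. Option off: divide by participants only.
    divisor = len(values) if count_missing_as_zero else len(participating)
    return sum(participating) / divisor

# Hypothetical data: four threads, only two of which call the function.
per_thread = [6.0, None, 3.0, None]

mean_all_threads = mean_across_threads(per_thread, True)     # 9.0 / 4
mean_participants = mean_across_threads(per_thread, False)   # 9.0 / 2
```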

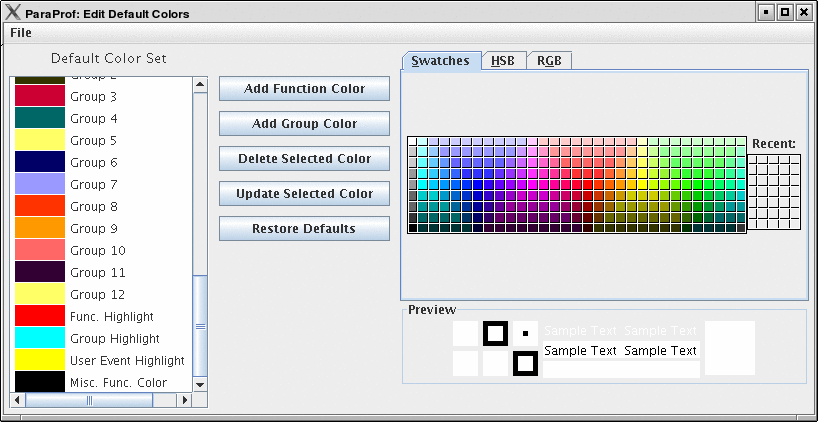

8.10.2. Default Colors

The default color editor changes how colors are distributed to functions whose color has not been specifically assigned. It is accessible from the File menu of the Preferences Window.

8.10.3. Color Map

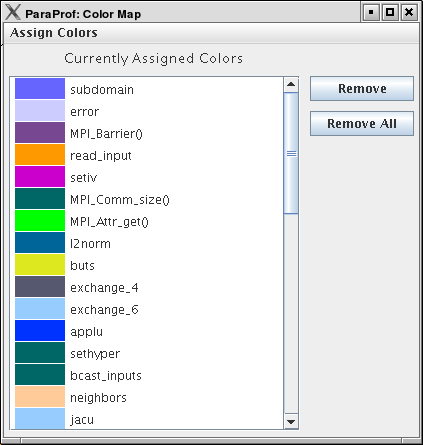

The color map shows specifically assigned colors. These values are used across all trials loaded so that the user can identify a particular function across multiple trials. In order to map an entire trial’s function set, select "Assign Defaults from →" and select a loaded trial.

Individual functions can be assigned a particular color by clicking on them in any of the other ParaProf Windows.

9. PerfExplorer - User’s Manual

9.1. Introduction

PerfExplorer is a framework for parallel performance data mining and knowledge discovery. The framework architecture enables the development and integration of data mining operations that will be applied to large-scale parallel performance profiles.

The overall goal of the PerfExplorer project is to create software that integrates sophisticated data mining techniques in the analysis of large-scale parallel performance data.

PerfExplorer supports clustering, summarization, association, regression, and correlation. Cluster analysis is the process of organizing data points into logically similar groupings, called clusters. Summarization is the process of describing the similarities within, and dissimilarities between, the discovered clusters. Association is the process of finding relationships in the data. One such method of association is regression analysis, the process of finding independent and dependent correlated variables in the data. In addition, comparative analysis extends these operations to compare results from different experiments, for instance, as part of a parametric study.

In addition to the data mining operations available, the user may optionally choose to perform comparative analysis. The types of charts available include time-steps per second, relative efficiency and speedup of the entire application, relative efficiency and speedup of one event, relative efficiency and speedup for all events, relative efficiency and speedup for all phases and runtime breakdown of the application by event or by phase. In addition, when the events are grouped together, such as in the case of communication routines, yet another chart shows the percentage of total runtime spent in that group of events. These analyses can be conducted across different combinations of parallel profiles and across phases within an execution.

9.2. Installation and Configuration

PerfExplorer uses TAUdb and PerfDMF databases, so if you have not already done so you will need to install TAUdb (see Introduction). After installing and configuring TAU, the perfexplorer executable should be available in your [path to tau]/tau2/[arch]/bin directory. You will need to run perfexplorer_configure, installed at the same location as perfexplorer, to set up your database for use with PerfExplorer and to download the additional third-party jar files that PerfExplorer requires. When prompted by perfexplorer_configure, give the name of your PerfDMF or TAUdb database and press Y to agree to download the jar files.

9.3. Running PerfExplorer

To run PerfExplorer type:

%>perfexplorer

When PerfExplorer loads, the left side of the window shows all the experiments that were loaded into PerfDMF. You can select the performance data you are interested in by navigating the tree structure. PerfExplorer will allow you to run analysis operations on these experiments. The cluster analysis results are visible on the right side of the window. Various types of comparative analysis are available from the drop-down menu.

To run an analysis operation, first select the metric of interest from the experiments on the left. Then perform the operation by selecting it from the Analysis menu. If you would like, you can set the clustering method, dimension reduction, normalization method and the number of clusters from the same menu.

The options under the Charts menu provide analysis over one or more applications, experiments, views or trials. To view these charts, first choose a metric of interest by selecting a trial from the tree on the left. Then optionally choose Set Metric of Interest or Set Event of Interest from the Charts menu (if you don’t, and you need to, you will be prompted). Now you can view a chart by selecting it from the Charts menu.

9.4. Cluster Analysis

Cluster analysis is a valuable tool for reducing large parallel profiles down to representative groups for investigation. Currently, there are two types of clustering analysis implemented in PerfExplorer. Both hierarchical and k-means analysis are used to group parallel profiles into common clusters, and then the clusters are summarized. Initially, we used similarity measures computed on a single parallel profile as input to the clustering algorithms, although other forms of input are possible. Here, the performance data is organized into multi-dimensional vectors for analysis. Each vector represents one parallel thread (or process) of execution in the profile. Each dimension in the vector represents an event that was profiled in the application. Events can be any sub-region of code, including libraries, functions, loops, basic blocks or even individual lines of code. In simple clustering examples, each vector represents only one metric of measurement. For our purposes, some dissimilarity value, such as Euclidean or Manhattan distance, is computed on the vectors. As discussed later, we have tested hierarchical and k-means cluster analysis in PerfExplorer on profiles with over 32K threads of execution with few difficulties.
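The two dissimilarity measures named above can be written down directly. In this sketch each vector stands for one thread and each element for one event's value of a single metric; the event values are hypothetical.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """Manhattan (city-block) distance between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))

# Hypothetical per-thread vectors: one dimension per profiled event.
thread0 = [3.0, 0.0, 1.0]
thread1 = [0.0, 4.0, 1.0]

d_euclid = euclidean(thread0, thread1)
d_manhattan = manhattan(thread0, thread1)
```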

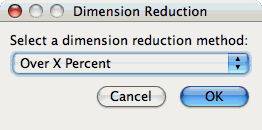

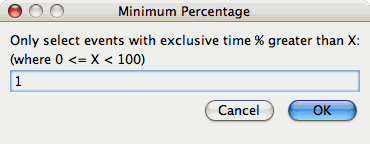

9.4.1. Dimension Reduction

Often, many hundreds of events are instrumented when profile data is collected. Clustering works best with dimensions less than 10, so dimension reduction is often necessary to get meaningful results. Currently, there is only one type of dimension reduction available in PerfExplorer. To reduce dimensions, the user specifies a minimum exclusive percentage for an event to be considered "significant".

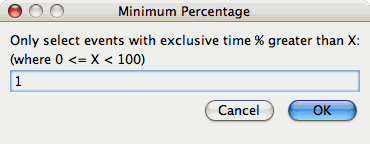

To reduce dimensions, select the "Select Dimension Reduction" item under the "Analysis" main menu bar item. The following dialog will appear:

Select "Over X Percent". The following dialog will appear:

Enter a value, for example "1".
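The "Over X Percent" filter can be sketched as follows. The guide does not spell out how the percentage is computed, so this sketch assumes it is each event's share of the summed exclusive values; the function name and the event totals are hypothetical.

```python
def significant_events(exclusive_totals, min_percent):
    """Keep events whose share of the total exclusive value exceeds min_percent."""
    total = sum(exclusive_totals.values())
    if total == 0:
        return []
    return [name for name, value in exclusive_totals.items()
            if 100.0 * value / total > min_percent]

# Hypothetical exclusive totals per event (same units for every event).
exclusive_totals = {"MPI_Recv()": 60.0, "compute()": 39.5, "init()": 0.5}

# With a threshold of 1 percent, init() is dropped as a dimension.
kept = significant_events(exclusive_totals, 1.0)
```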

9.4.2. Max Number of Clusters

By default, PerfExplorer will attempt k-means clustering with values of k from 2 to 10. To change the maximum number of clusters, select the "Set Maximum Number of Clusters" item under the "Analysis" main menu item. The following dialog will appear:

9.4.3. Performing Cluster Analysis

To perform cluster analysis, you first need to select a metric. To select a metric, navigate through the tree of applications, experiments and trials, and expand the trial of interest, showing the available metrics, as shown in the figure below:

![Selecting a Metric to Cluster](clusteringselection.png)

After selecting the metric of interest, select the "Do Clustering" item under the "Analysis" main menu bar item. The following dialog will appear:

![Confirm Clustering Options](confirmclustering.png)

After confirming the clustering, the clustering will begin. When the clustering results are available, you can view them in the "Cluster Results" tab.

![Cluster Results](clusterresults.png)

There are a number of images in the "Cluster Results" window. From left to right, the windows indicate the cluster membership histogram, a PCA scatterplot showing the cluster memberships, a virtual topology of the parallel machine, the minimum values for each event in each cluster, the average values for each event in each cluster, and the maximum values for each event in each cluster. Clicking on a thumbnail image in the main window will bring up the images, as shown below:

![Cluster Membership Histogram](histogram.png)

![Cluster Membership Scatterplot](scatterplot.png)

![Cluster Virtual Topology](topology.png)

![Cluster Average Behavior](averages.png)
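For readers unfamiliar with k-means, the following is a minimal textbook sketch of the algorithm applied to per-thread vectors. It is not PerfExplorer's implementation: the deterministic choice of initial centroids, the fixed iteration count and the sample data are all assumptions made to keep the example small and reproducible.

```python
def kmeans(points, k, iterations=20):
    """Plain k-means on lists of floats, using squared Euclidean distance."""
    # Deterministic start: use the first k points as the initial centroids.
    centroids = [list(p) for p in points[:k]]
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        for i, p in enumerate(points):
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            assignment[i] = dists.index(min(dists))
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return assignment, centroids

# Hypothetical threads described by two events each: two threads spend
# their time mostly in the second event, two mostly in the first.
threads = [[1.0, 9.0], [1.2, 8.8], [9.0, 1.0], [8.8, 1.2]]

assignment, centroids = kmeans(threads, 2)
```

The returned `assignment` gives the cluster index of each thread, which corresponds to the information summarized in the cluster membership histogram.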

9.5. Correlation Analysis

Correlation analysis in PerfExplorer is used to explore relationships between events in a profile. Each event is pairwise plotted with the other events, and a correlation coefficient is calculated for the relationship. When the events are highly positively correlated (coefficient close to 1.0) or highly negatively correlated (coefficient close to -1.0), then the relationships will show up as linear groupings in the results. Clusters may also be apparent.
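The guide does not state which correlation coefficient is computed. Assuming the common Pearson coefficient, a pairwise correlation between two events (one value per thread) can be sketched like this; the event data is hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical per-thread values for three events.
event_a = [1.0, 2.0, 3.0, 4.0]
event_b = [2.0, 4.0, 6.0, 8.0]   # rises with event_a
event_c = [8.0, 6.0, 4.0, 2.0]   # falls as event_a rises

r_ab = pearson(event_a, event_b)  # close to 1.0
r_ac = pearson(event_a, event_c)  # close to -1.0
```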

9.5.1. Dimension Reduction

Often, many hundreds of events are instrumented when profile data is collected. Clustering works best with dimensions less than 10, so dimension reduction is often necessary to get meaningful results. Currently, there is only one type of dimension reduction available in PerfExplorer. To reduce dimensions, the user specifies a minimum exclusive percentage for an event to be considered "significant".

To reduce dimensions, select the "Select Dimension Reduction" item under the "Analysis" main menu bar item. The following dialog will appear:

Select "Over X Percent". The following dialog will appear:

Enter a value, for example "1".

9.5.2. Performing Correlation Analysis

To perform correlation analysis, you first need to select a metric. To select a metric, navigate through the tree of applications, experiments and trials, and expand the trial of interest, showing the available metrics, as shown in the figure below:

![Selecting a Metric to Cluster](clusteringselection.png)

After selecting the metric of interest, select the "Do Correlation Analysis" item under the "Analysis" main menu bar item. A confirmation dialog will appear, and you can either confirm the correlation request or cancel it. After confirming the correlation, the analysis will begin. When the analysis results are available, you can view them in the "Correlation Results" tab.

![Correlation Results](correlationresults.png)

There are a number of images in the "Correlation Results" window. Each thumbnail represents a pairwise correlation plot of two events. Clicking on a thumbnail image in the main window will bring up the images, as shown below:

![Correlation Example](correlationexample.png)

9.6. Charts

9.6.1. Setting Parameters

There are a few parameters which need to be set when doing comparisons between trials in the database. If any necessary setting is not configured before requesting a chart, you will be prompted to set the value. The following settings may be necessary for the various charts available:

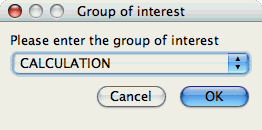

Group of Interest

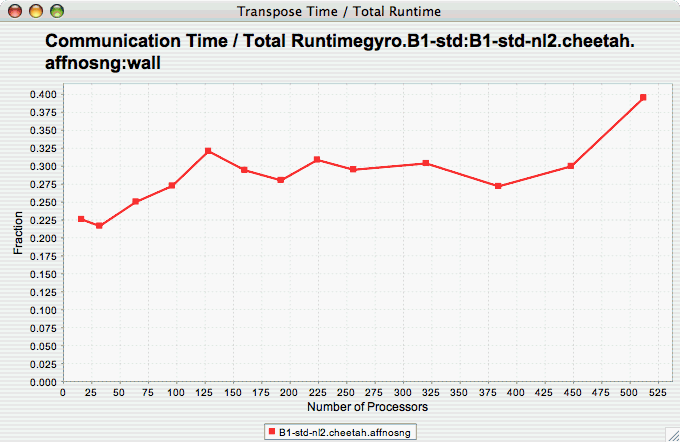

TAU events are often associated with common groups, such as "MPI", "TRANSPOSE", etc. This value is used for showing what fraction of the total runtime this group of events contributed.

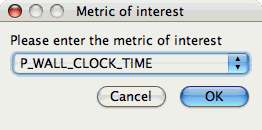

Metric of Interest

Profiles may contain many metrics gathered for a single trial. This selects which of the available metrics the user is interested in.

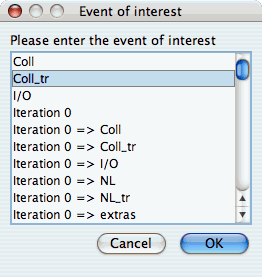

Event of Interest

Some charts examine events in isolation. This setting configures which event to examine.

Total Number of Timesteps

One chart, the "Timesteps per second" chart, will calculate the number of timesteps completed per second. This setting configures the total number of timesteps used in that calculation.

9.6.2. Standard Chart Types

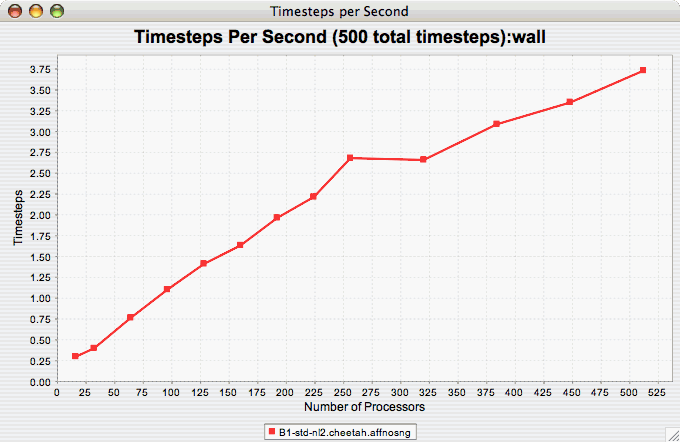

Timesteps Per Second

The Timesteps Per Second chart shows how an application scales as it relates to time-to-solution. If the timesteps are not already set, you will be prompted to enter the total number of timesteps in the trial (see Total Number of Timesteps). If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest). To request this chart, select one or more experiments or one view, and select this chart item under the "Charts" main menu item.
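The quantity plotted is simply the total number of timesteps divided by the runtime of each trial. A minimal sketch, with hypothetical processor counts and runtimes:

```python
def timesteps_per_second(total_timesteps, runtime_seconds):
    """Timesteps completed per second of runtime."""
    return total_timesteps / runtime_seconds

# Hypothetical trials: processor count -> runtime in seconds,
# each running the same 1000 timesteps.
runtimes = {16: 400.0, 32: 200.0, 64: 125.0}
total_timesteps = 1000

rates = {procs: timesteps_per_second(total_timesteps, secs)
         for procs, secs in runtimes.items()}
```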

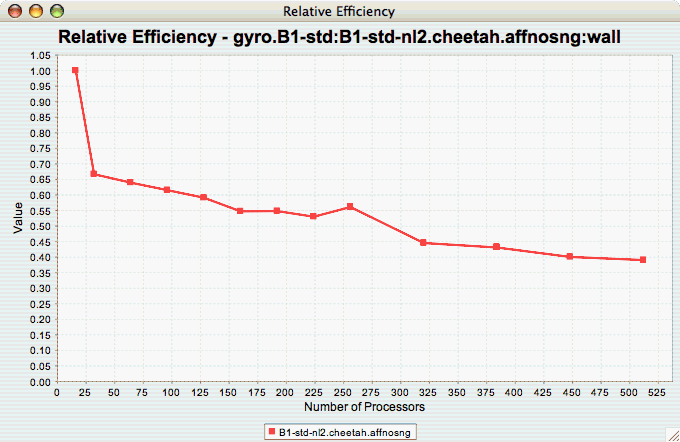

Relative Efficiency

The Relative Efficiency chart shows how an application scales with respect to relative efficiency. That is, as the number of processors increases by a factor, the time to solution is expected to decrease by the same factor (with ideal scaling). The ratio of the actual scaling to the expected scaling is the relative efficiency. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest). To request this chart, select one experiment or view, and select this chart item under the "Charts" main menu item.
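Under the usual strong-scaling definition, which is assumed here, relative efficiency is the ideally scaled baseline time divided by the measured time. The trial with the fewest processors is taken as the baseline; the numbers are hypothetical.

```python
def relative_efficiency(base_procs, base_time, procs, time):
    """Expected (ideally scaled) time divided by the measured time."""
    expected_time = base_time * base_procs / procs
    return expected_time / time

# Hypothetical trials: (processor count, runtime in seconds).
trials = [(16, 400.0), (32, 200.0), (64, 125.0)]
base_procs, base_time = trials[0]

efficiency = {procs: relative_efficiency(base_procs, base_time, procs, time)
              for procs, time in trials}
```

Here the 32-processor trial scales ideally, while the 64-processor trial takes 125 seconds instead of the expected 100 and so reaches 80% efficiency.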

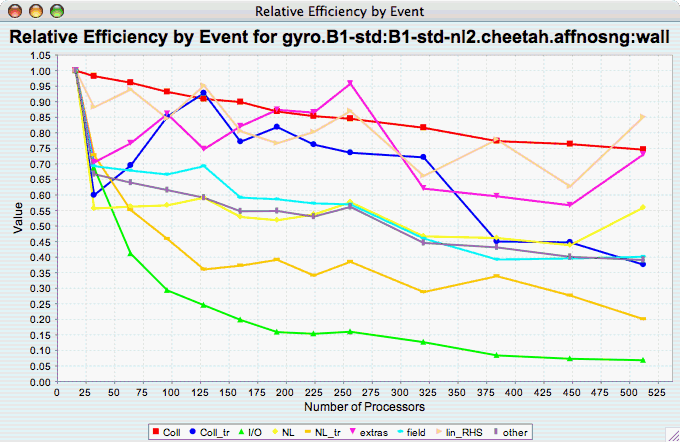

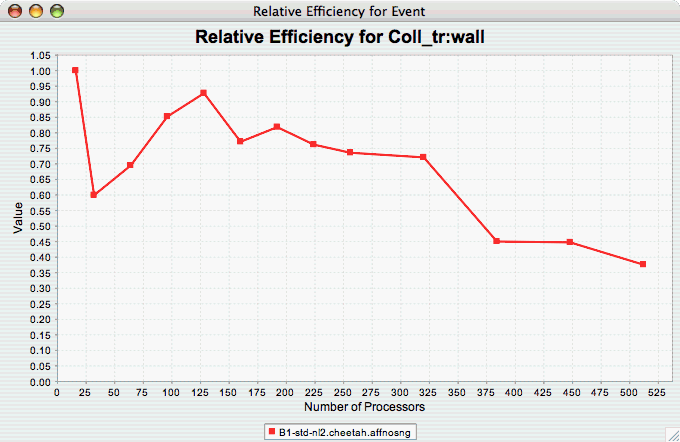

Relative Efficiency by Event

The Relative Efficiency By Event chart shows how each event in an application scales with respect to relative efficiency. That is, as the number of processors increases by a factor, the time to solution is expected to decrease by the same factor (with ideal scaling). The ratio of the actual scaling to the expected scaling is the relative efficiency. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest). To request this chart, select one or more experiments or one view, and select this chart item under the "Charts" main menu item.

Relative Efficiency for One Event

The Relative Efficiency for One Event chart shows how one event from an application scales with respect to relative efficiency. That is, as the number of processors increases by a factor, the time to solution is expected to decrease by the same factor (with ideal scaling). The ratio of the actual scaling to the expected scaling is the relative efficiency. If there is more than one event to choose from, and you have not yet selected an event of interest, you may be prompted to select the event of interest (see Event of Interest). If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest). To request this chart, select one or more experiments or one view, and select this chart item under the "Charts" main menu item.

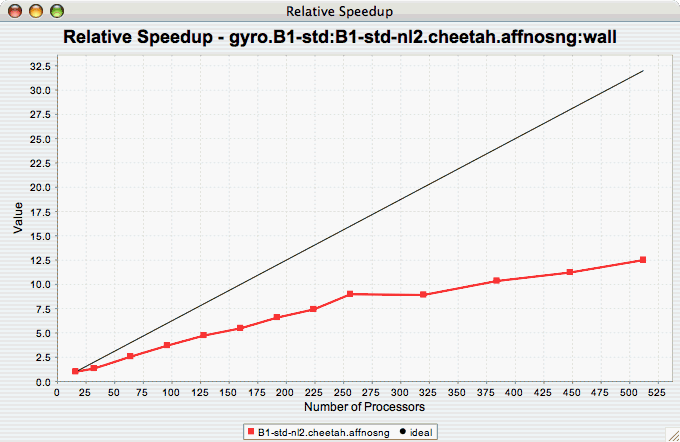

Relative Speedup

The Relative Speedup chart shows how an application scales with respect to relative speedup. That is, as the number of processors increases by a factor, the speedup is expected to increase by the same factor (with ideal scaling). The ideal speedup is charted, along with the actual speedup for the application. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest ). To request this chart, select one or more experiments or one view, and select this chart item under the "Charts" main menu item.
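The two curves on a speedup chart can be sketched as below, assuming the conventional definitions: actual speedup is the baseline time divided by the measured time, and ideal speedup is the ratio of processor counts. The trial data is hypothetical.

```python
def relative_speedup(base_time, time):
    """Measured speedup relative to the baseline trial."""
    return base_time / time

def ideal_speedup(base_procs, procs):
    """Speedup expected under ideal scaling."""
    return procs / base_procs

# Hypothetical trials: (processor count, runtime in seconds).
trials = [(16, 400.0), (32, 200.0), (64, 125.0)]
base_procs, base_time = trials[0]

# For each processor count: (ideal speedup, actual speedup).
speedups = {procs: (ideal_speedup(base_procs, procs),
                    relative_speedup(base_time, time))
            for procs, time in trials}
```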

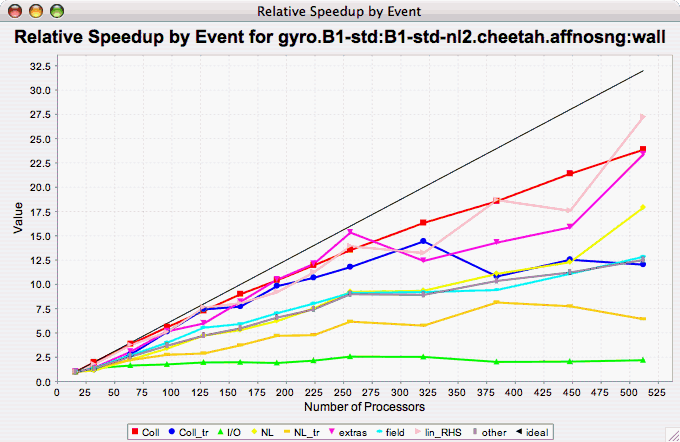

Relative Speedup by Event

The Relative Speedup By Event chart shows how the events in an application scale with respect to relative speedup. That is, as the number of processors increases by a factor, the speedup is expected to increase by the same factor (with ideal scaling). The ideal speedup is charted, along with the actual speedup for the application. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest ). To request this chart, select one experiment or view, and select this chart item under the "Charts" main menu item.

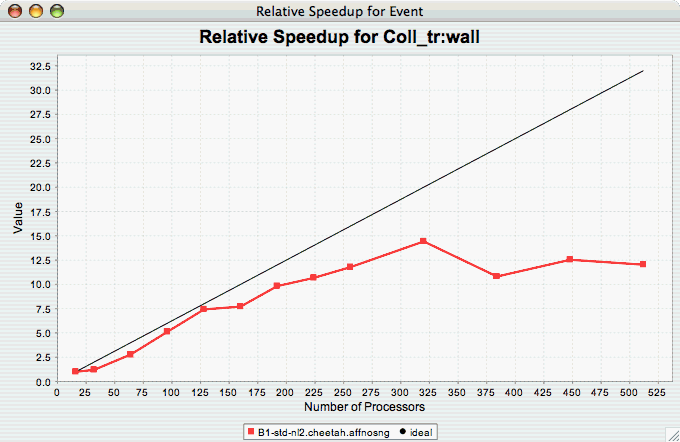

Relative Speedup for One Event

The Relative Speedup for One Event chart shows how one event in an application scales with respect to relative speedup. That is, as the number of processors increases by a factor, the speedup is expected to increase by the same factor (with ideal scaling). The ideal speedup is charted, along with the actual speedup for the application. If there is more than one event to choose from, and you have not yet selected an event of interest, you may be prompted to select the event of interest (see Event of Interest ). If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest ). To request this chart, select one or more experiments or one view, and select this chart item under the "Charts" main menu item.

Group % of Total Runtime

The Group % of Total Runtime chart shows how the fraction of the total runtime for one group of events changes as the number of processors increases. If there is more than one group to choose from, and you have not yet selected a group of interest, you may be prompted to select the group of interest (see Group of Interest ). If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest ). To request this chart, select one or more experiments or one view, and select this chart item under the "Charts" main menu item.
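As an illustration of the quantity being charted, the sketch below sums the time of the events in one group and expresses it as a percentage of the total for each trial. The group membership, event names and timings are hypothetical, and the use of exclusive time is an assumption.

```python
def group_percent_of_runtime(exclusive_by_event, group_members):
    """Percentage of the summed exclusive time spent in events of one group."""
    total = sum(exclusive_by_event.values())
    if total == 0:
        return 0.0
    in_group = sum(time for event, time in exclusive_by_event.items()
                   if event in group_members)
    return 100.0 * in_group / total

# Hypothetical group membership and per-trial exclusive times (seconds).
mpi_group = {"MPI_Send()", "MPI_Recv()"}
trials = {
    16: {"compute()": 90.0, "MPI_Send()": 4.0, "MPI_Recv()": 6.0},
    64: {"compute()": 30.0, "MPI_Send()": 8.0, "MPI_Recv()": 12.0},
}

percent_by_procs = {procs: group_percent_of_runtime(events, mpi_group)
                    for procs, events in trials.items()}
```

In this made-up data the MPI share grows from 10% to 40% as the processor count increases, the kind of trend this chart is meant to expose.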

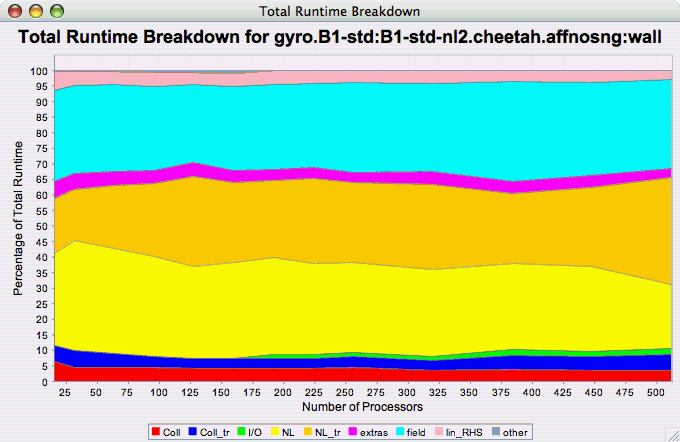

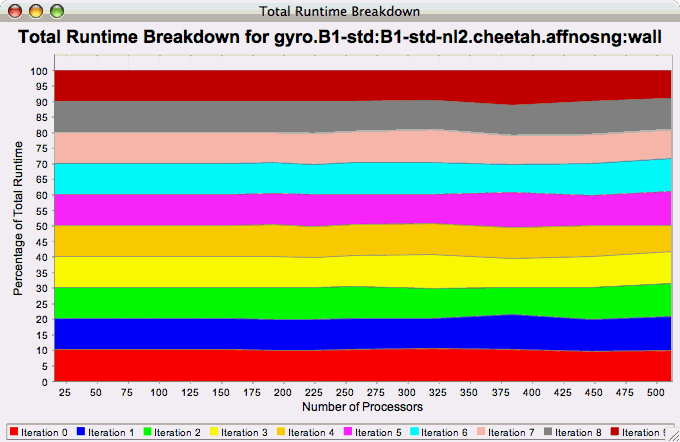

Runtime Breakdown

The Runtime Breakdown chart shows the fraction of the total runtime for all events in the application, and how the fraction changes as the number of processors increases. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest ). To request this chart, select one experiment or view, and select this chart item under the "Charts" main menu item.

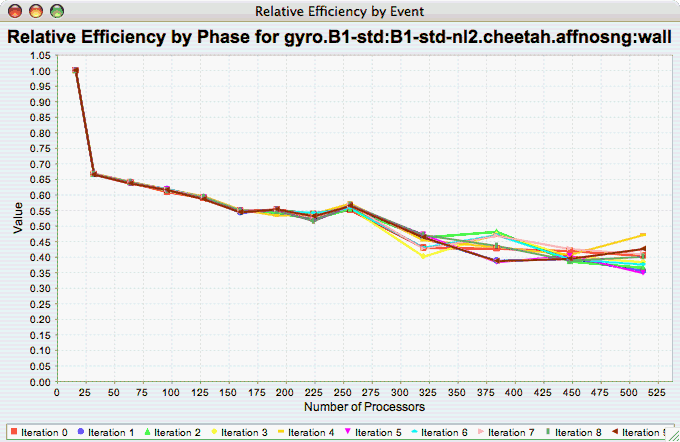

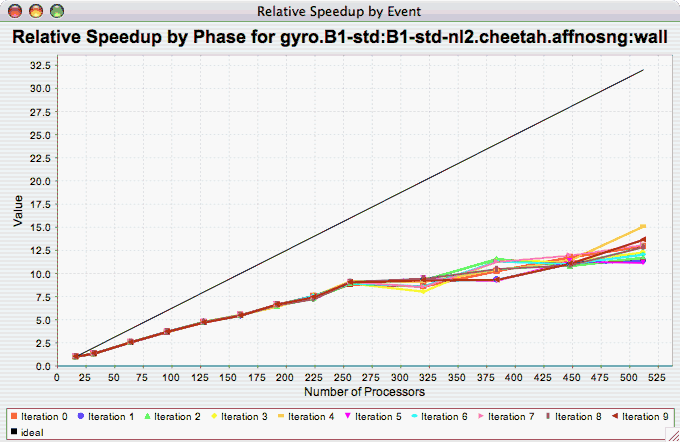

9.6.3. Phase Chart Types

TAU now provides the ability to break down profiles with respect to phases of execution. One such application would be to collect separate statistics for each timestep, or group of timesteps. In order to visualize the variance between the phases of execution, a number of phase-based charts are available.

Relative Efficiency per Phase

The Relative Efficiency Per Phase chart shows the relative efficiency for each phase, as the number of processors increases. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest ). To request this chart, select one experiment or view, and select this chart item under the "Charts" main menu item.

Relative Speedup per Phase

The Relative Speedup Per Phase chart shows the relative speedup for each phase, as the number of processors increases. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest ). To request this chart, select one experiment or view, and select this chart item under the "Charts" main menu item.

Phase Fraction of Total Runtime

The Phase Fraction of Total Runtime chart shows the breakdown of the execution by phases, and shows how that breakdown changes as the number of processors increases. If there is more than one metric to choose from, you may be prompted to select the metric of interest (see Metric of Interest). To request this chart, select one experiment or view, and select this chart item under the "Charts" main menu item.

9.7. Custom Charts

In addition to the default charts available in the charts menu, there is a custom chart interface. To access the interface, select the "Custom Charts" tab in the results pane of the main window, as shown:

![The Custom Charts Interface](customcharts.png)

There are a number of controls for the custom charts. They are:

- Main Only - When selected, only the main event (the event with the highest inclusive value) will be selected. When deselected, the "Events" control (see below) is activated, and one or all events can be selected.
- Call Paths - When selected, callpath events will be available in the "Events" control (see below).
- Log Y - When selected, the Y axis will be the log of the true value.
- Scalability - When selected, the chart will be interpreted as a speedup chart. The trial with the fewest number of threads of execution will be considered the baseline trial.
- Efficiency - When selected, the chart will be interpreted as a relative efficiency chart. The trial with the fewest number of threads of execution will be considered the baseline trial.
- Strong Scaling - When deselected, the speedup or efficiency chart will be interpreted as a strong scaling study (the workload is the same for all trials). When selected, the button will change to "Weak Scaling", and the chart will be interpreted as a weak scaling study (the workload is proportional to the total number of threads in each trial).
- Horizontal - When selected, the chart X and Y axes will be swapped.
- Show Y-Axis Zero - When selected, the chart will include the value 0. When deselected, the chart will only show the relevant values for all data points.
- Chart Title - The value to use for the chart title.
- Series Name/Value - The field to be used to group the data points as a series.
- X Axis Value - The field to use as the X axis value.
- X Axis Name - The name to put in the chart for the value along the X axis.
- Y Axis Value - The field to use as the Y axis value.
- Y Axis Name - The name to put in the chart for the value along the Y axis.
- Dimension Reduction - Whether or not to use dimension reduction. This is only applicable when "Main Only" is disabled.
- Cutoff - When "Dimension Reduction" is enabled, the cutoff value for selecting "All Events".
- Metric - The metric of interest for the Y axis.
- Units - The unit to be selected for the Y axis.
- Event - The event of interest, or "All Events".
- XML Field - When the X or Y axis is selected to be an XML field, this is the field of interest.
- Apply - Build the chart.
- Reset - Restore the options back to the default values.

Once the chart is generated, it can be saved as a vector image by selecting "File → Save As Vector Image". The chart can also be saved as a PNG by right-clicking on the chart and selecting "Save As…".
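The "Scalability", "Efficiency" and "Strong Scaling" controls described above correspond to the usual textbook definitions of relative speedup and relative efficiency. The sketch below uses those standard definitions with the smallest trial as the baseline; it is an assumption that PerfExplorer computes the values exactly this way, and the function name `relative_scaling` and the timings are hypothetical.

```python
def relative_scaling(times_by_threads, weak=False):
    """Return {threads: (speedup, efficiency)} relative to the smallest trial."""
    base_threads = min(times_by_threads)  # baseline: fewest threads of execution
    base_time = times_by_threads[base_threads]
    results = {}
    for threads, time in sorted(times_by_threads.items()):
        scale = threads / base_threads
        if weak:
            # Workload grows with the thread count, so constant time is ideal.
            efficiency = base_time / time
            speedup = scale * efficiency
        else:
            # Workload is fixed, so time should shrink as threads are added.
            speedup = base_time / time
            efficiency = speedup / scale
        results[threads] = (speedup, efficiency)
    return results


# Hypothetical wall clock times in seconds, keyed by thread count.
strong = relative_scaling({64: 100.0, 128: 55.0, 256: 32.0})
weak = relative_scaling({64: 100.0, 128: 104.0, 256: 110.0}, weak=True)

for threads, (speedup, efficiency) in strong.items():
    print("strong", threads, round(speedup, 3), round(efficiency, 3))
for threads, (speedup, efficiency) in weak.items():
    print("weak", threads, round(speedup, 3), round(efficiency, 3))
```

In both modes the baseline trial has a speedup and an efficiency of 1.0 by construction, so every other trial is read relative to it.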

9.8. Visualization

Under the "Visualization" main menu item, there are five types of raw data visualization. The five items are "3D Visualization", "Data Summary", "Create Boxchart", "Create Histogram" and "Create Normal Probability Chart". For the Boxchart, Histogram and Normal Probability Charts, you can either select one metric in the trial (which selects all events by default), or expand the metric and select events of interest.

9.8.1. 3D Visualization

When the "3D Visualization" is requested, PerfExplorer examines the data to try to determine the most interesting events in the trial. That is, for the selected metric in the selected trial, the database will calculate the weighted relative variance for each event across all threads of execution, in order to find the top four "significant" events. These events are selected by calculating: stddev(exclusive) / (max(exclusive) - min(exclusive)) * exclusive_percentage. After selecting the top four events, they are graphed in an OpenGL window.

![3D Visualization of multivariate data](3dvisualization.png)
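The selection formula above can be tried out on small data. The sketch below is an illustration only: the helper names `significance` and `top_events` and the sample values are hypothetical, and it assumes a population standard deviation, which may differ from the `stddev` the database actually applies.

```python
from statistics import pstdev


def significance(exclusive_values, exclusive_percentage):
    """stddev(exclusive) / (max(exclusive) - min(exclusive)) * exclusive_percentage."""
    spread = max(exclusive_values) - min(exclusive_values)
    if spread == 0:
        # Every thread reports the same value, so there is no variance to weight.
        return 0.0
    return pstdev(exclusive_values) / spread * exclusive_percentage


def top_events(per_thread_exclusive, percentages, count=4):
    """Rank events by significance and keep the highest-scoring ones."""
    scores = {
        event: significance(values, percentages[event])
        for event, values in per_thread_exclusive.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:count]


# Hypothetical exclusive values for four threads, and each event's percentage.
per_thread = {
    "solve": [10, 10, 10, 10],
    "exchange": [1, 5, 1, 5],
    "io": [0, 0, 0, 8],
    "init": [2, 2, 2, 3],
    "pack": [3, 4, 3, 4],
}
percent = {"solve": 40, "exchange": 10, "io": 5, "init": 2, "pack": 8}

selected = top_events(per_thread, percent)
print(selected)
```

Note that "solve" is not selected even though it has the largest percentage: it is perfectly balanced across threads, so its weighted variance is zero.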

9.8.2. Data Summary

In order to see a summary of the performance data in the database, select the "Show Data Summary" item under the "Visualization" main menu item.

![Data Summary Window](datasummary.png)

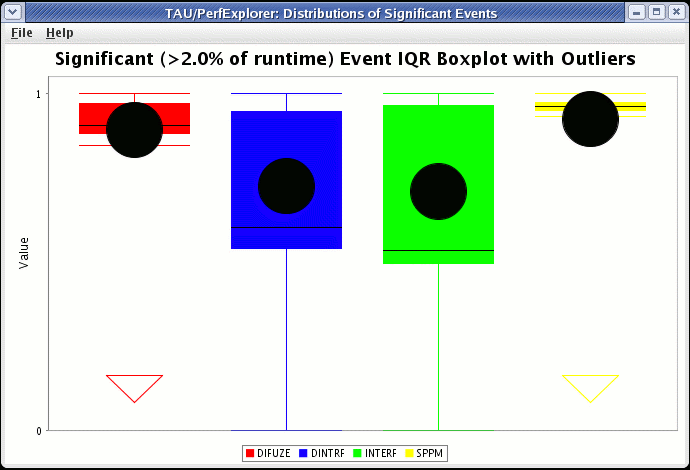

9.8.3. Creating a Boxchart

In order to see a boxchart summary of the performance data in the database, select the "Create Boxchart" item under the "Visualization" main menu item.

9.8.4. Creating a Histogram

In order to see a histogram summary of the performance data in the database, select the "Create Histogram" item under the "Visualization" main menu item.

9.8.5. Creating a Normal Probability Chart

In order to see a normal probability summary of the performance data in the database, select the "Create NormalProbability" item under the "Visualization" main menu item.

![Normal Probability](normalprobability.png)
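For readers unfamiliar with this chart type, a normal probability chart pairs each sorted data value with the quantile a normal distribution would place at the same rank; points close to a straight line suggest normally distributed data. The sketch below shows one common construction. The helper name `normal_probability_points`, the plotting position `(i + 0.5) / n`, and the sample values are assumptions, and PerfExplorer may build its chart differently.

```python
from statistics import NormalDist


def normal_probability_points(values):
    """Pair each sorted value with the standard normal quantile at its rank."""
    ordered = sorted(values)
    n = len(ordered)
    normal = NormalDist()  # mean 0, standard deviation 1
    return [
        (normal.inv_cdf((i + 0.5) / n), value)
        for i, value in enumerate(ordered)
    ]


# Hypothetical per-thread exclusive times for one event.
points = normal_probability_points([3.0, 1.0, 2.0])
for quantile, value in points:
    print(round(quantile, 3), value)
```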

9.9. Views

Data is often loaded into the database with multiple parametric cross-sections. The charts available in PerfExplorer are primarily designed for scalability analysis, but the data might have been loaded as a parametric study. In the following example, the data has been loaded with three problem sizes: MIN, HALF and FULL.

![Potential scalability data organized as a parametric study](parametricdata.png)

In order to examine this data in a scalability study, it is necessary to reorganize the data. However, it is not necessary to re-load the data. Using views in PerfExplorer, you can re-organize the data based on values in the database.

9.9.1. Creating Views

To create a view, select the "Create New View" item under the "Views" main menu item. The first step is to select the table which will form the basis of the view. The three possible values are Application, Experiment and Trial:

After selecting the table, you need to select the column on which to filter:

![Selecting a column](viewscolumn.png)

After selecting the column, you need to select the operator for comparing to that column:

![Selecting an operator](viewsoperator.png)

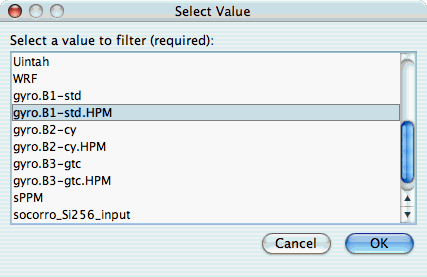

After selecting the operator, you need to select the value for comparing to the column:

After selecting the value, you need to select a name for the view:

![Entering a name for the view](viewsname.png)

After creating the view, you will need to exit PerfExplorer and re-start it to see the view. This is a known problem with the application, and will be fixed in a future release.

![The completed view](completedview.png)
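Conceptually, the table, column, operator and value chosen in the steps above act as a filter over rows in the database. The sketch below models that idea in plain Python. It is not how PerfExplorer stores or evaluates views; the operator names, the `apply_view` helper, and the sample rows are all hypothetical.

```python
import operator

# Hypothetical operator names; the operators PerfExplorer offers may differ.
OPERATORS = {
    "=": operator.eq,
    "!=": operator.ne,
    "<": operator.lt,
    ">": operator.gt,
}


def apply_view(rows, column, op, value):
    """Keep the rows whose column compares true against the given value."""
    compare = OPERATORS[op]
    return [row for row in rows if compare(row[column], value)]


# Hypothetical trial rows from a parametric study with three problem sizes.
trials = [
    {"name": "64 procs", "problem_size": "MIN", "threads": 64},
    {"name": "64 procs", "problem_size": "FULL", "threads": 64},
    {"name": "128 procs", "problem_size": "FULL", "threads": 128},
    {"name": "128 procs", "problem_size": "HALF", "threads": 128},
]

# A view on the FULL problem size leaves one trial per thread count,
# which is the shape a scalability chart expects.
full_view = apply_view(trials, "problem_size", "=", "FULL")
print([row["threads"] for row in full_view])
```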

9.9.2. Creating Subviews

In order to create sub-views, you first need to select the "Create New Sub-View" item from the "Views" main menu item. The first dialog box will prompt you to select the view (or sub-view) to base the new sub-view on:

![Selecting the base view](subview.png)

After selecting the base view or sub-view, the options for creating the new sub-view are the same as creating a new view. After creating the sub-view, you will need to exit PerfExplorer and re-start it to see the sub-view. This is a known problem with the application, and will be fixed in a future release.

![Completed sub-views](completedsubview.png)

9.10. Running PerfExplorer Scripts

As of version 2.0, PerfExplorer officially supports a scripting interface, which is useful for automating analysis. For example, a script can load a trial, perform data reduction, extract key phases, derive metrics, and plot the result.

9.10.1. Analysis Components

There are many operations available, including:

- BasicStatisticsOperation
- CopyOperation
- CorrelateEventsWithMetadata
- CorrelationOperation
- DeriveMetricOperation
- DifferenceMetadataOperation
- DifferenceOperation
- DrawBoxChartGraph
- DrawGraph
- DrawMMMGraph
- ExtractCallpathEventOperation
- ExtractEventOperation
- ExtractMetricOperation
- ExtractNonCallpathEventOperation
- ExtractPhasesOperation
- ExtractRankOperation
- KMeansOperation
- LinearRegressionOperation
- LogarithmicOperation
- MergeTrialsOperation
- MetadataClusterOperation
- PCAOperation
- RatioOperation
- ScalabilityOperation
- TopXEvents
- TopXPercentEvents

9.10.2. Scripting Interface

The scripting interface is in Python, and scripts can be used to build analysis workflows. The Python scripts control the Java classes in the application through the Jython interpreter (http://www.jython.org/). There are two types of components which are useful in building analysis scripts. The first type is the PerformanceResult interface, and the second is the PerformanceAnalysisComponent interface. For documentation on how to use the Java classes, see the javadoc in the perfexplorer source distribution, and the example scripts below. To build the perfexplorer javadoc, type

%>./make javadoc

in the perfexplorer source directory.

9.10.3. Example Script

from glue import PerformanceResult
from glue import PerformanceAnalysisOperation
from glue import ExtractEventOperation
from glue import Utilities
from glue import BasicStatisticsOperation
from glue import DeriveMetricOperation
from glue import MergeTrialsOperation
from glue import TrialResult
from glue import AbstractResult
from glue import DrawMMMGraph
from edu.uoregon.tau.perfdmf import Trial
from java.util import HashSet
from java.util import ArrayList

# define the boolean names for Jython versions that lack them
True = 1
False = 0

def glue():
    print "doing phase test for gtc on jaguar"

    # load the trial
    Utilities.setSession("perfdmf.demo")
    trial1 = Utilities.getTrial("gtc_bench", "Jaguar Compiler Options", "fastsse")
    result1 = TrialResult(trial1)
    print "got the data"

    # get the iteration inclusive totals
    events = ArrayList()
    for event in result1.getEvents():
        #if event.find("Iteration") >= 0 and result1.getEventGroupName(event).find("TAU_PHASE") < 0:
        # keep the "Iteration" events, skipping callpath events (names containing "=>")
        if event.find("Iteration") >= 0 and event.find("=>") < 0:
            events.add(event)
    extractor = ExtractEventOperation(result1, events)
    extracted = extractor.processData().get(0)
    print "extracted phases"

    # derive metrics; each derived metric is merged back into "extracted"
    # so that later steps can refer to it by name

    # L1 hits: total L1 accesses minus L1 misses
    derivor = DeriveMetricOperation(extracted, "PAPI_L1_TCA", "PAPI_L1_TCM", DeriveMetricOperation.SUBTRACT)
    derived = derivor.processData().get(0)
    merger = MergeTrialsOperation(extracted)
    merger.addInput(derived)
    extracted = merger.processData().get(0)

    # L1 hit rate: L1 hits divided by total L1 accesses
    derivor = DeriveMetricOperation(extracted, "PAPI_L1_TCA-PAPI_L1_TCM", "PAPI_L1_TCA", DeriveMetricOperation.DIVIDE)
    derived = derivor.processData().get(0)
    merger = MergeTrialsOperation(extracted)
    merger.addInput(derived)
    extracted = merger.processData().get(0)

    # L2 hits: L1 misses minus L2 misses
    derivor = DeriveMetricOperation(extracted, "PAPI_L1_TCM", "PAPI_L2_TCM", DeriveMetricOperation.SUBTRACT)
    derived = derivor.processData().get(0)
    merger = MergeTrialsOperation(extracted)
    merger.addInput(derived)
    extracted = merger.processData().get(0)

    # L2 hit rate: L2 hits divided by L1 misses
    derivor = DeriveMetricOperation(extracted, "PAPI_L1_TCM-PAPI_L2_TCM", "PAPI_L1_TCM", DeriveMetricOperation.DIVIDE)
    derived = derivor.processData().get(0)
    merger = MergeTrialsOperation(extracted)
    merger.addInput(derived)
    extracted = merger.processData().get(0)

    # floating point instructions per unit of wall clock time
    derivor = DeriveMetricOperation(extracted, "PAPI_FP_INS", "P_WALL_CLOCK_TIME", DeriveMetricOperation.DIVIDE)
    derived = derivor.processData().get(0)
    merger = MergeTrialsOperation(extracted)
    merger.addInput(derived)
    extracted = merger.processData().get(0)

    # fraction of all instructions that are floating point
    derivor = DeriveMetricOperation(extracted, "PAPI_FP_INS", "PAPI_TOT_INS", DeriveMetricOperation.DIVIDE)
    derived = derivor.processData().get(0)
    merger = MergeTrialsOperation(extracted)
    merger.addInput(derived)
    extracted = merger.processData().get(0)
    print "derived metrics..."

    # get the Statistics
    dostats = BasicStatisticsOperation(extracted, False)
    stats = dostats.processData()
    print "got stats..."

    # NOTE: this early return skips the graphing loop below;
    # remove it to draw one chart per metric
    return

    for metric in stats.get(0).getMetrics():
        grapher = DrawMMMGraph(stats)
        metrics = HashSet()
        metrics.add(metric)
        grapher.set_metrics(metrics)
        grapher.setTitle("GTC Phase Breakdown: " + metric)
        grapher.setSeriesType(DrawMMMGraph.TRIALNAME)
        grapher.setCategoryType(DrawMMMGraph.EVENTNAME)